【Smart Mode】【Flowchart Mode】How to Start Group's Tasks | Web Scraping Tool | ScrapeStorm

Abstract: This tutorial shows you how to start a group’s tasks in ScrapeStorm. No programming needed; the operation is entirely visual.

When scraping data, you sometimes need to run many tasks, and starting them one by one takes a lot of time. To save time, we developed the Group’s Tasks feature: select a group and start all of its tasks in one batch.

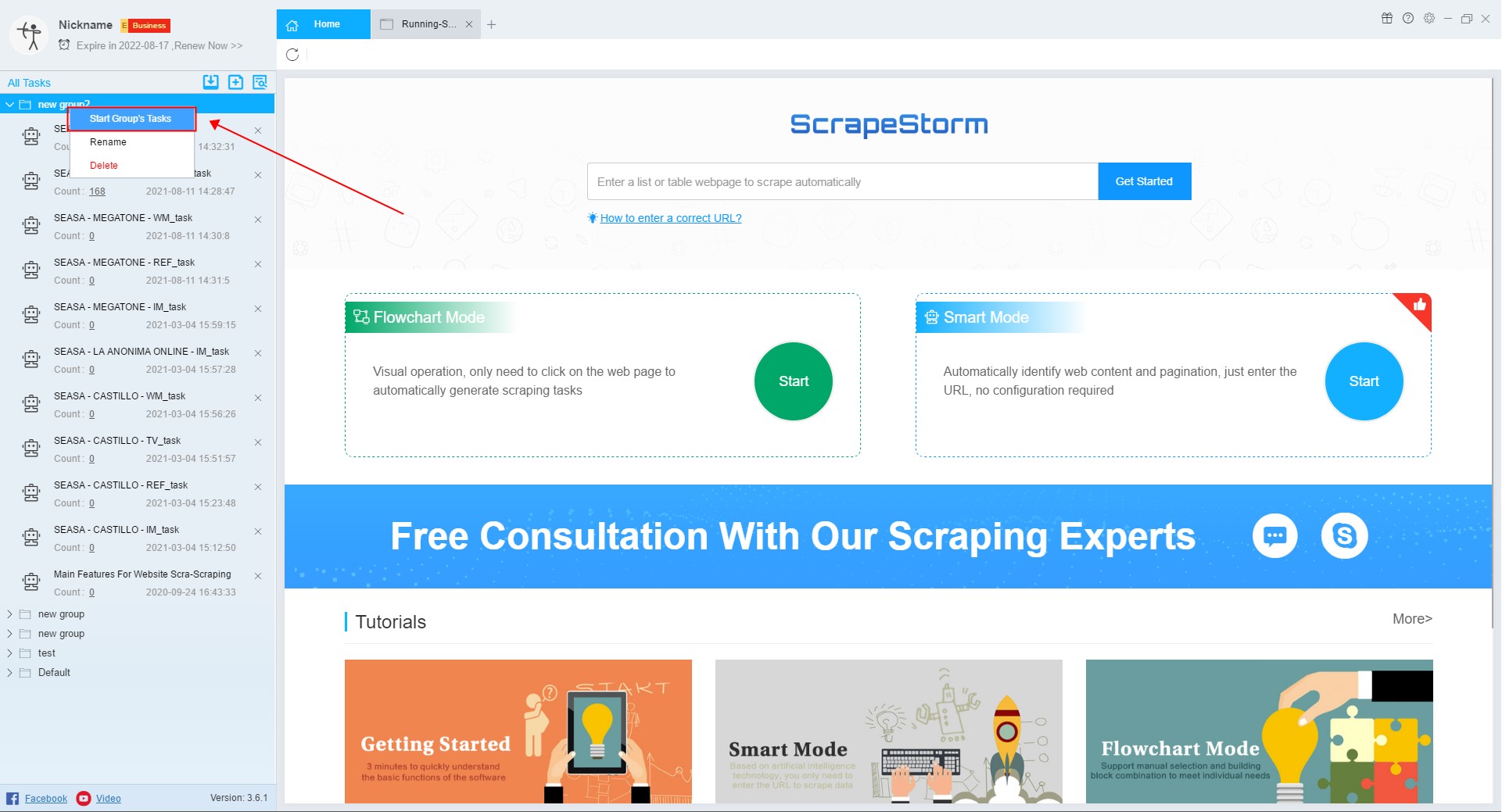

Put the tasks you need to scrape into a group, expand the group, then right-click it and select “Start Group’s Tasks”.

The group must be expanded before you can start its tasks. If you use this feature on a group that is not expanded, you will get the following error:

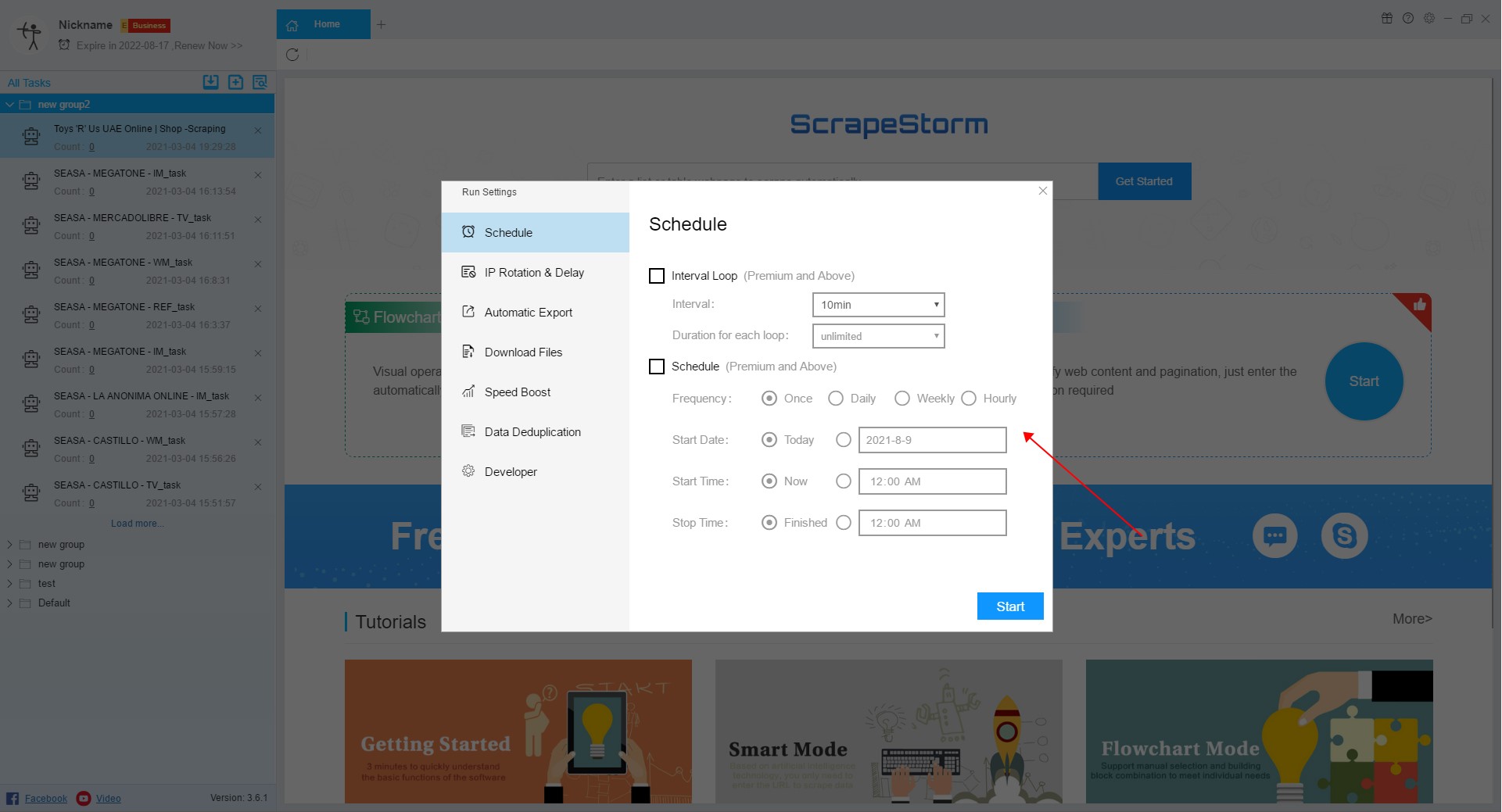

After you select “Start Group’s Tasks”, the run settings window pops up. Every setting you make there is applied to each task in the group.

Click here to learn more about how to configure the scraping task.

Click the “Start” button. The tasks in the group then run one by one, in order.

In addition, if you check the Speed Boost Engine at this point, it will occupy only one resource.

If you want to pause or stop a task while it is scraping, click the “Pause” or “Stop” button on the page while the task is running.

When you click “Stop”, the current task stops and the next task in the group starts right away. When you click “Pause”, all the tasks are paused, and none of them will run until you click “Resume”.
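The Stop and Pause behavior described above can be pictured with a small Python simulation. This is only an illustrative model of the described behavior, not ScrapeStorm code or a ScrapeStorm API: the function `run_group`, the `events` mapping, and the log messages are all hypothetical names invented for this sketch.

```python
from collections import deque


def run_group(tasks, events):
    """Simulate a group run (illustrative model only, not ScrapeStorm code).

    tasks:  task names, in the order they appear in the group.
    events: hypothetical mapping from a task name to the button clicked
            while that task is running: "stop" or "pause".
    Returns a log of what happened, in order.
    """
    log = []
    queue = deque(tasks)  # tasks run one by one, in order
    while queue:
        task = queue.popleft()
        log.append(f"start {task}")
        action = events.get(task)
        if action == "stop":
            # Stop ends only the current task; the next one starts right away.
            log.append(f"stop {task}")
            continue
        if action == "pause":
            # Pause holds every task; nothing runs until Resume is clicked.
            log.append("pause all")
            log.append("resume")
        log.append(f"finish {task}")
    return log
```

For example, `run_group(["A", "B", "C"], {"A": "stop", "B": "pause"})` logs that A is stopped, B is paused and then resumed and finished, and C runs normally afterwards.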

If you need to stop all of the group’s tasks, select the group, then right-click and stop it.

P.S. To view the data after scraping, open each task and view its data there. Batch export of a group’s data is not currently supported.