Data Filtering | Web Scraping Tool | ScrapeStorm

Abstract: Data filtering is the process of extracting data that meets certain conditions from a data set, or of excluding unnecessary data.

Introduction

Data filtering is the process of extracting data that meets certain conditions from a data set, or of excluding unnecessary data. It is widely used as a preprocessing step for data analysis and machine learning, and it helps improve both the accuracy of the data and the efficiency of processing it.
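The two directions in this definition, extracting what meets a condition and excluding what does not belong, can be sketched in a few lines of Python. This is a minimal illustration: the records, the field names, and the `is_adult_in_germany` condition are all hypothetical.

```python
# Hypothetical records; the field names are illustrative only.
records = [
    {"name": "Ana", "country": "DE", "age": 34},
    {"name": "Ben", "country": "US", "age": 17},
    {"name": "Chen", "country": "DE", "age": 52},
    {"name": "Dia", "country": "FR", "age": 29},
]


def is_adult_in_germany(record):
    """Hypothetical condition used to decide which records match."""
    return record["country"] == "DE" and record["age"] >= 18


# Extraction: keep only the records that meet the condition.
kept = [r for r in records if is_adult_in_germany(r)]

# Exclusion: drop the records that meet the condition and keep the rest.
remaining = [r for r in records if not is_adult_in_germany(r)]

print([r["name"] for r in kept])       # ['Ana', 'Chen']
print([r["name"] for r in remaining])  # ['Ben', 'Dia']
```

Writing the condition as a separate function keeps the rule in one place, so the same rule can be used for extraction or for exclusion.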

Applicable Scenarios

Improve data quality by removing inaccurate or duplicate records and records with missing values. Develop targeted marketing strategies by narrowing the data to specific customer segments. Filter data from IoT sensors so that a system detects and responds only to events that meet specific conditions. Extract data points that fall outside normal ranges to identify anomalous behavior.
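Two of these scenarios, cleaning out duplicate or incomplete records and extracting out-of-range values, might look like the following sketch. The sensor readings, the `NORMAL_RANGE` bounds, and the helper names `clean` and `out_of_range` are assumptions made for illustration; real thresholds depend on the device and the application.

```python
# Hypothetical sensor readings: (sensor_id, timestamp, temperature in Celsius).
readings = [
    ("s1", 1, 21.5),
    ("s1", 1, 21.5),  # exact duplicate of the previous row
    ("s2", 1, None),  # missing value
    ("s1", 2, 22.0),
    ("s2", 2, 85.0),  # outside the assumed normal range
    ("s2", 3, 20.9),
]

NORMAL_RANGE = (10.0, 40.0)  # assumed bounds, chosen only for this example


def clean(rows):
    """Drop rows with missing values and exact duplicates, preserving order."""
    seen = set()
    result = []
    for row in rows:
        if any(value is None for value in row):
            continue  # skip incomplete rows
        if row in seen:
            continue  # skip rows already emitted
        seen.add(row)
        result.append(row)
    return result


def out_of_range(rows, low, high):
    """Return the rows whose temperature lies outside [low, high]."""
    return [row for row in rows if not (low <= row[2] <= high)]


cleaned = clean(readings)
anomalies = out_of_range(cleaned, *NORMAL_RANGE)

print(len(cleaned))  # 4
print(anomalies)     # [('s2', 2, 85.0)]
```

Cleaning runs before the range check so that a missing temperature never reaches the comparison.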

Pros: Eliminating cluttered and irrelevant data improves the accuracy of analytical results and models. Extracting only the necessary information from large amounts of data makes better use of computing resources and reduces processing time. Extracting data based on specific conditions allows a more focused analysis.

Cons: Filtering may discard useful information; in particular, if the conditions are too strict, important data may be excluded. Filtering according to certain criteria may also introduce bias into the data, which carries over into analysis results and models. Filtering with complex conditions increases computational cost and time.
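One way to keep an eye on the first two risks is to report how much data a filter retains and how a summary statistic shifts after filtering. The sketch below is only an illustration: the order values, the threshold of 100, and the `filter_report` helper are hypothetical.

```python
from statistics import mean

# Hypothetical order values, used only to illustrate the effect of a strict filter.
order_values = [12.0, 18.0, 25.0, 40.0, 55.0, 120.0, 300.0, 15.0]


def filter_report(values, predicate):
    """Summarize how a filter changes the size and the mean of a data set."""
    kept = [v for v in values if predicate(v)]
    return {
        "retained_fraction": len(kept) / len(values),
        "mean_before": mean(values),
        "mean_after": mean(kept) if kept else None,
    }


# A strict condition: keep only orders of 100 or more.
report = filter_report(order_values, lambda v: v >= 100.0)

print(report["retained_fraction"])  # 0.25
print(report["mean_before"])        # 73.125
print(report["mean_after"])         # 210.0
```

Here the strict condition keeps a quarter of the records and nearly triples the mean, so any conclusion drawn from the filtered set describes large orders only, not orders in general.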

Legend

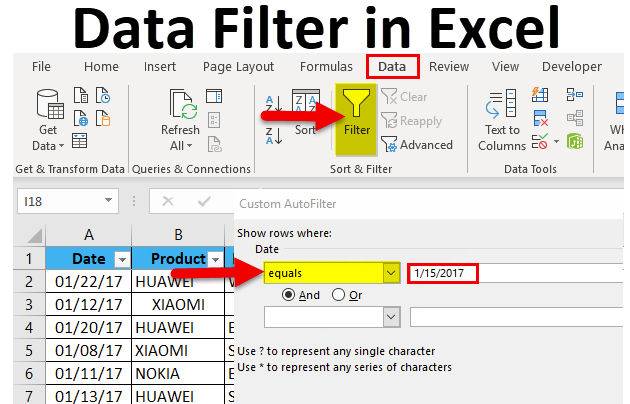

1. Data filter in Excel.

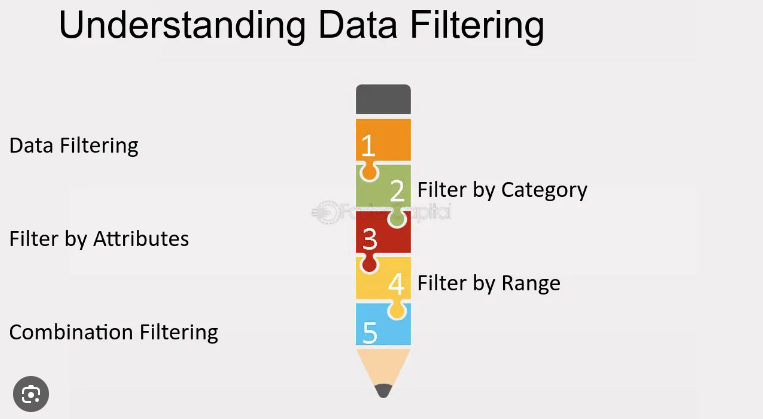

2. Understanding data filtering.

Reference Link

https://www.indeed.com/career-advice/career-development/what-is-data-filtering