Data Standardization | Web Scraping Tool | ScrapeStorm

Abstract: Data standardization is the process of converting data with different measurement units, scales, and data formats into a unified, standard format.

Introduction

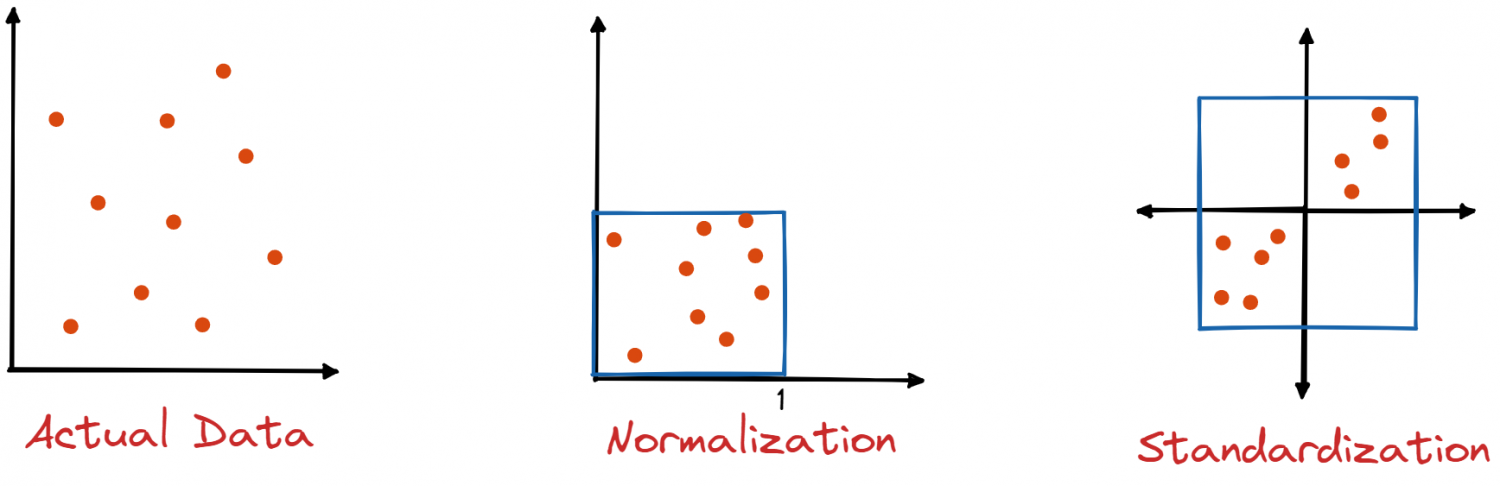

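Data standardization is the process of converting data with different measurement units, scales, and data formats into a unified, standard format. For numerical data, two rescaling methods are common: normalization (min-max scaling) maps values into a fixed range, typically 0 to 1, while z-score standardization shifts and scales values so that they have mean 0 and standard deviation 1. Z-score standardization changes only the location and spread of the data, not the shape of its distribution.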
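A minimal sketch of the two numerical rescaling methods, using only the Python standard library. Min-max normalization computes x' = (x - min) / (max - min), and z-score standardization computes z = (x - mean) / std. The function names `min_max_normalize` and `z_score_standardize` are hypothetical, and using the population standard deviation (`statistics.pstdev`) rather than the sample one is an assumption; tools differ on this choice.

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Rescale values linearly into [0, 1]: x' = (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are equal, so the range is zero; map everything to 0.0.
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]


def z_score_standardize(values):
    """Shift and scale values to mean 0 and standard deviation 1: z = (x - mean) / std."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (an assumption; some tools use the sample std)
    if sigma == 0:
        # Zero spread: every value equals the mean, so every z-score is 0.0.
        return [0.0 for _ in values]
    return [(x - mu) / sigma for x in values]


heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
print(min_max_normalize(heights_cm))    # [0.0, 0.25, 0.5, 0.75, 1.0]
print(z_score_standardize(heights_cm))  # approximately [-1.414, -0.707, 0.0, 0.707, 1.414]
```

Both functions keep the relative order of the values; they differ only in what the resulting numbers are anchored to (the observed range versus the mean and spread).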

Applicable Scenarios

Data standardization is commonly used in data mining, machine learning, statistical analysis, and data integration, where it allows data from different sources and of different types to be compared and combined consistently.
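In data integration, standardization often means agreeing on one unit and one format per field before merging. The following is a minimal Python sketch, assuming two hypothetical sources: one records dates as ISO 8601 (`YYYY-MM-DD`) and weights in kilograms, the other uses US-style `MM/DD/YYYY` dates and pounds. The record fields, the helper `standardize_record`, and the target schema (ISO dates, weights in kg rounded to two decimals) are illustrative choices, not a fixed standard.

```python
from datetime import datetime

LB_TO_KG = 0.45359237  # exact definition of the international avoirdupois pound

# Hypothetical records from two sources that use different units and date formats.
source_a = [{"date": "2024-03-05", "weight": 70.0, "unit": "kg"}]
source_b = [{"date": "03/05/2024", "weight": 154.0, "unit": "lb"}]  # assumed month/day/year order


def standardize_record(record, date_format):
    """Convert one record to the target schema: ISO 8601 date and weight in kg."""
    parsed = datetime.strptime(record["date"], date_format)
    if record["unit"] == "kg":
        weight_kg = record["weight"]
    elif record["unit"] == "lb":
        weight_kg = record["weight"] * LB_TO_KG
    else:
        raise ValueError(f"unknown unit: {record['unit']}")
    return {"date": parsed.date().isoformat(), "weight_kg": round(weight_kg, 2)}


# Each source is parsed with its own date format, then both share one schema.
merged = (
    [standardize_record(r, "%Y-%m-%d") for r in source_a]
    + [standardize_record(r, "%m/%d/%Y") for r in source_b]
)
for row in merged:
    print(row)
# {'date': '2024-03-05', 'weight_kg': 70.0}
# {'date': '2024-03-05', 'weight_kg': 69.85}
```

Once both rows use the same date format and unit, they can be compared, sorted, or deduplicated directly.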

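Pros: Data standardization reduces inconsistencies in units, scales, and formats, making data easier to understand and analyze. It also helps improve data quality, reduce errors, and improve the performance of models that are sensitive to feature scale, such as distance-based methods like k-nearest neighbors.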
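One common reason standardization improves model performance is that distance-based methods are sensitive to feature scale. The hypothetical Python sketch below runs a one-nearest-neighbor lookup on two features, age in years and annual income in dollars. Without scaling, the income column, whose values are thousands of times larger, dominates the Euclidean distance; after z-score standardization fitted on the training points, both features contribute. The data points and helper names are invented for illustration.

```python
import math
from statistics import mean, pstdev

# Hypothetical training points: (age in years, annual income in dollars).
train = {
    "A": (25.0, 50_000.0),
    "B": (60.0, 51_000.0),
    "C": (45.0, 90_000.0),
    "D": (30.0, 20_000.0),
}
query = (27.0, 52_000.0)


def nearest(points, q):
    """Return the name of the point with the smallest Euclidean distance to q."""
    return min(points, key=lambda name: math.dist(points[name], q))


# Fit per-feature mean and population standard deviation on the training data only.
columns = list(zip(*train.values()))
mus = [mean(col) for col in columns]
sigmas = [pstdev(col) for col in columns]


def standardize(point):
    """Apply the z-score transform fitted above to one point."""
    return tuple((x - m) / s for x, m, s in zip(point, mus, sigmas))


scaled_train = {name: standardize(p) for name, p in train.items()}
scaled_query = standardize(query)

print(nearest(train, query))                # B: income differences dominate the raw distance
print(nearest(scaled_train, scaled_query))  # A: both features now contribute comparably
```

Fitting the mean and standard deviation on the training data and reusing them for new queries keeps every point on the same scale.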

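Cons: In some cases, excessive normalization can cause information loss or make the data harder to interpret. For example, rescaled values are no longer expressed in their original units, and min-max normalization is sensitive to outliers: a single extreme value widens the range and compresses all other values into a narrow band. The standardization method should therefore be chosen carefully.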
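As one concrete illustration of these risks, min-max normalization is sensitive to outliers, and keeping the fitted parameters is what lets scaled values be read back in their original units. The Python sketch below shows both effects; the helper names (`min_max_fit`, `min_max_transform`, `min_max_inverse`) and the sample values are hypothetical.

```python
def min_max_fit(values):
    """Return the (min, max) parameters needed to normalize and later invert."""
    return min(values), max(values)


def min_max_transform(values, lo, hi):
    """Map values into [0, 1] using previously fitted parameters."""
    if hi == lo:
        raise ValueError("min-max scaling is undefined when all values are equal")
    return [(x - lo) / (hi - lo) for x in values]


def min_max_inverse(scaled, lo, hi):
    """Map normalized values back to the original units."""
    return [lo + s * (hi - lo) for s in scaled]


typical = [10.0, 12.0, 14.0, 16.0]
with_outlier = typical + [1000.0]

lo, hi = min_max_fit(typical)
print(min_max_transform(typical, lo, hi))  # values spread across the full [0, 1] range

lo_o, hi_o = min_max_fit(with_outlier)
squeezed = min_max_transform(with_outlier, lo_o, hi_o)
print(max(squeezed[:4]))  # the four typical values now all lie below 0.01

# Keeping (lo, hi) makes the scaled numbers interpretable again in the original units
# (up to floating-point rounding).
print(min_max_inverse(squeezed, lo_o, hi_o))
```

A single value of 1000 is enough to push the four typical values into a band narrower than 0.01, which hides differences between them that were obvious in the raw data.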

Legend

1. Actual data, normalization, and data standardization.

2. Data standardization.
