Data workflow | Web Scraping Tool | ScrapeStorm

Abstract: Data workflow refers to the entire process of data collection, input, processing, manipulation, and output.


Introduction

Data workflow refers to the entire process of data collection, input, processing, manipulation, and output. It can be understood as the flow of data through a system, from the point where the data is gathered to the point where results are delivered.
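The five stages named above can be sketched as a chain of small functions. This is only an illustrative sketch: the sample data, the function names (`collect`, `load`, `process`, `manipulate`, `output`), and the choice of CSV and JSON are assumptions made for the example, not part of any ScrapeStorm feature.

```python
import csv
import io
import json

# Hypothetical sample data standing in for a real source (file, API, scraper).
RAW_CSV = "name,price\nWidget, 4.50\nGadget,\nGizmo,12\n"


def collect():
    """Collection: obtain raw data (here, a hard-coded CSV string)."""
    return RAW_CSV


def load(raw):
    """Input: parse the raw text into a list of records."""
    return list(csv.DictReader(io.StringIO(raw)))


def process(records):
    """Processing: clean values and drop rows with a missing price."""
    cleaned = []
    for row in records:
        price = row["price"].strip()
        if not price:
            continue  # skip incomplete rows
        cleaned.append({"name": row["name"].strip(), "price": float(price)})
    return cleaned


def manipulate(records):
    """Manipulation: derive a summary from the cleaned records."""
    total = sum(r["price"] for r in records)
    return {"count": len(records), "total": total, "items": records}


def output(summary):
    """Output: serialize the result for a downstream consumer."""
    return json.dumps(summary, sort_keys=True)


def run_workflow():
    """Run every stage in order, passing each result to the next stage."""
    return output(manipulate(process(load(collect()))))


if __name__ == "__main__":
    print(run_workflow())
```

Keeping each stage as a separate function means a single stage can be replaced or tested without touching the others, which is the kind of standardization a data workflow is meant to provide.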

Applicable Scenarios

Data workflow is suitable for scenarios that require processing and analyzing large amounts of data. It helps enterprises and organizations standardize and optimize their data processing procedures and improve the efficiency and quality of data processing, which in turn better supports business operations and development.

Pros: Clear structure, high fault tolerance, suitable for processing big data, can be built on inexpensive machines, and easy to compare with computer processing.

Cons: Low level of abstraction, not suitable for complex processes, the data processing steps are not described in detail, and drawing the workflow by hand is cumbersome.

Legend

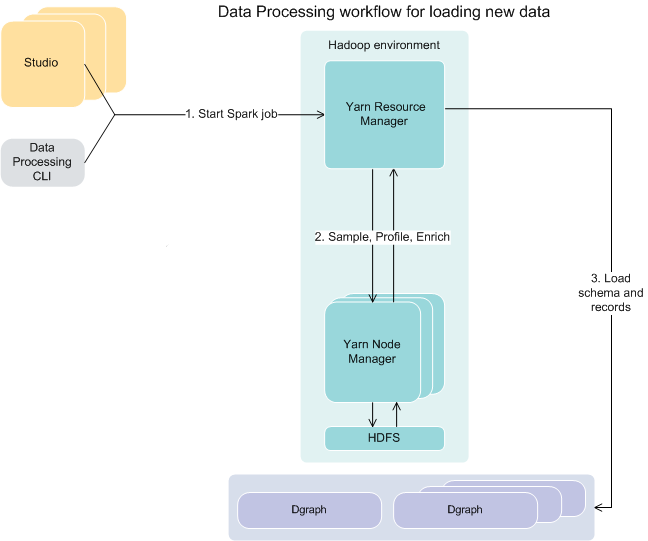

1. Data workflow.

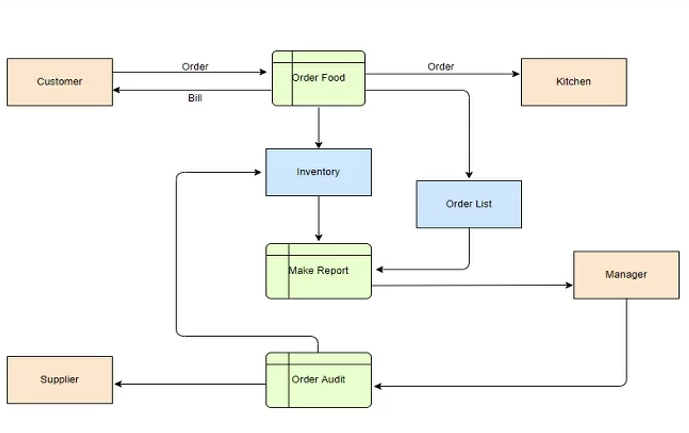

2. Data workflow.