Database Normalization

Abstract: Database Normalization is a set of rules used in relational database design to organize data and reduce redundancy and dependency.

Introduction

Database Normalization is a set of rules (the normal forms) used in relational database design to organize data, reduce redundancy, and eliminate undesirable dependencies between attributes. By decomposing data into multiple related tables and defining the relationships between them, it improves data consistency and integrity and makes storage more efficient.
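The decomposition described above can be sketched in a few lines. This is a minimal illustration only, using Python's built-in `sqlite3` module; the table names (`customers`, `orders`), the columns, and the sample rows are hypothetical and do not come from any particular system.

```python
import sqlite3

# Hypothetical flat rows: customer details are repeated on every order.
flat_orders = [
    (1, "Alice", "alice@example.com", "Keyboard"),
    (2, "Alice", "alice@example.com", "Mouse"),
    (3, "Bob", "bob@example.com", "Monitor"),
]

def normalize(rows):
    """Split flat order rows into a customers table and an orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript("""
        CREATE TABLE customers (
            customer_id INTEGER PRIMARY KEY,
            name  TEXT NOT NULL,
            email TEXT NOT NULL UNIQUE
        );
        CREATE TABLE orders (
            order_id    INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
            product     TEXT NOT NULL
        );
    """)
    for order_id, name, email, product in rows:
        # Store each customer once; later rows with the same email are ignored.
        conn.execute(
            "INSERT OR IGNORE INTO customers (name, email) VALUES (?, ?)",
            (name, email),
        )
        (customer_id,) = conn.execute(
            "SELECT customer_id FROM customers WHERE email = ?", (email,)
        ).fetchone()
        conn.execute(
            "INSERT INTO orders (order_id, customer_id, product) VALUES (?, ?, ?)",
            (order_id, customer_id, product),
        )
    conn.commit()
    return conn

conn = normalize(flat_orders)
customer_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(customer_count, order_count)
```

With the sample data, the three flat rows become two customer rows and three order rows, and each order points at its customer through `customer_id` instead of repeating the name and email.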

Applicable Scenarios

It is suitable for scenarios that require strict data consistency, high transaction processing performance, and complex queries, such as financial systems, enterprise resource planning (ERP), and e-commerce platforms.

Pros: Reduces data redundancy and storage costs; eliminates update, insertion, and deletion anomalies, which improves data consistency; simplifies table structure and makes maintenance easier.
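As a rough illustration of an update anomaly, the sketch below compares a denormalized table with a normalized pair of tables. It assumes Python's built-in `sqlite3` module, and the schema and email addresses are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Denormalized: the email is copied onto every order row.
    CREATE TABLE flat_orders (order_id INTEGER PRIMARY KEY, customer TEXT, email TEXT);
    INSERT INTO flat_orders VALUES (1, 'Alice', 'alice@old.example'),
                                   (2, 'Alice', 'alice@old.example');

    -- Normalized: the email lives in exactly one row.
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, email TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY,
                         customer_id INTEGER REFERENCES customers(customer_id));
    INSERT INTO customers VALUES (1, 'Alice', 'alice@old.example');
    INSERT INTO orders VALUES (1, 1), (2, 1);
""")

# An update that touches only one of the duplicated rows (a typical bug).
conn.execute("UPDATE flat_orders SET email = 'alice@new.example' WHERE order_id = 1")
flat_emails = {row[0] for row in conn.execute(
    "SELECT email FROM flat_orders WHERE customer = 'Alice'")}

# The same change in the normalized schema is a single-row update.
conn.execute("UPDATE customers SET email = 'alice@new.example' WHERE customer_id = 1")
normalized_emails = {row[0] for row in conn.execute(
    "SELECT c.email FROM orders o JOIN customers c USING (customer_id)")}

print(len(flat_emails), len(normalized_emails))
```

After the partial update, the flat table holds two different emails for the same customer, while every order in the normalized schema resolves to the single updated value.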

Cons: Complex queries often have to join multiple tables, which may degrade performance; a poor design can increase development complexity, so the normal form level applied needs to be balanced against actual needs.
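The join overhead mentioned above can be seen in a small sketch. It again assumes Python's built-in `sqlite3` module, and the four-table schema (`customers`, `products`, `orders`, `order_items`) is a hypothetical example rather than a recommended design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE products  (product_id  INTEGER PRIMARY KEY, title TEXT, price REAL);
    CREATE TABLE orders    (order_id    INTEGER PRIMARY KEY,
                            customer_id INTEGER REFERENCES customers(customer_id));
    CREATE TABLE order_items (order_id   INTEGER REFERENCES orders(order_id),
                              product_id INTEGER REFERENCES products(product_id),
                              quantity   INTEGER,
                              PRIMARY KEY (order_id, product_id));

    INSERT INTO customers VALUES (1, 'Alice');
    INSERT INTO products  VALUES (10, 'Keyboard', 40.0), (11, 'Mouse', 15.0);
    INSERT INTO orders    VALUES (100, 1);
    INSERT INTO order_items VALUES (100, 10, 1), (100, 11, 2);
""")

# One report line per order needs three joins across four tables.
report = conn.execute("""
    SELECT c.name, o.order_id, SUM(p.price * oi.quantity) AS total
    FROM orders o
    JOIN customers   c  ON c.customer_id = o.customer_id
    JOIN order_items oi ON oi.order_id   = o.order_id
    JOIN products    p  ON p.product_id  = oi.product_id
    GROUP BY c.name, o.order_id
""").fetchall()
print(report)
```

A single order total already touches every table in the schema. On large data sets this is where indexing, or a deliberately less normalized reporting table, may be worth considering.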

Legend

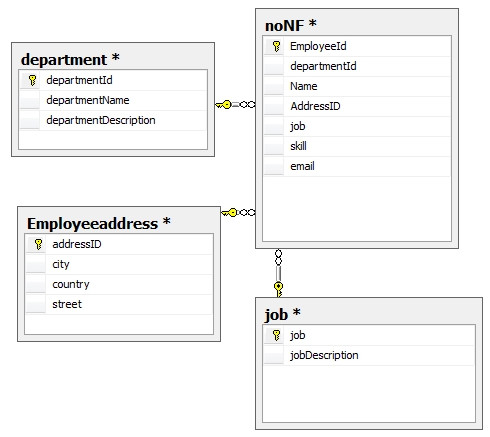

1. Database Normalization overview.

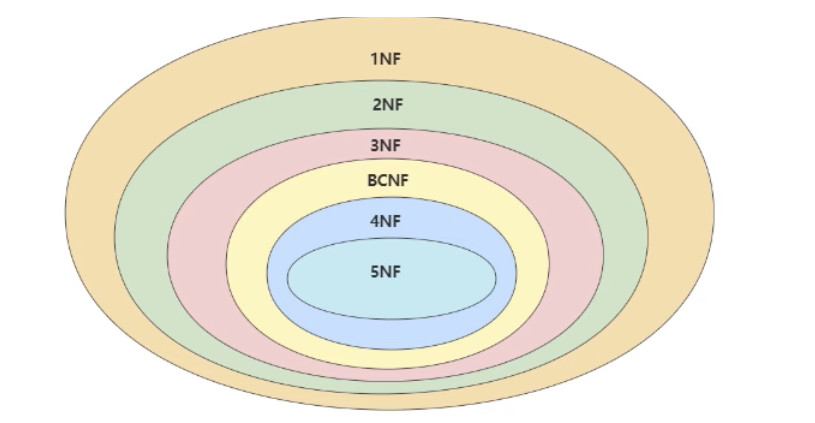

2. Database Normalization.

Reference Links

https://en.wikipedia.org/wiki/Database_normalization

https://www.geeksforgeeks.org/introduction-of-database-normalization/

https://www.techtarget.com/searchdatamanagement/definition/normalization