【Flowchart Mode】Introduction to the task editing interface

Abstract: This article introduces the task editing interface in Flowchart Mode.

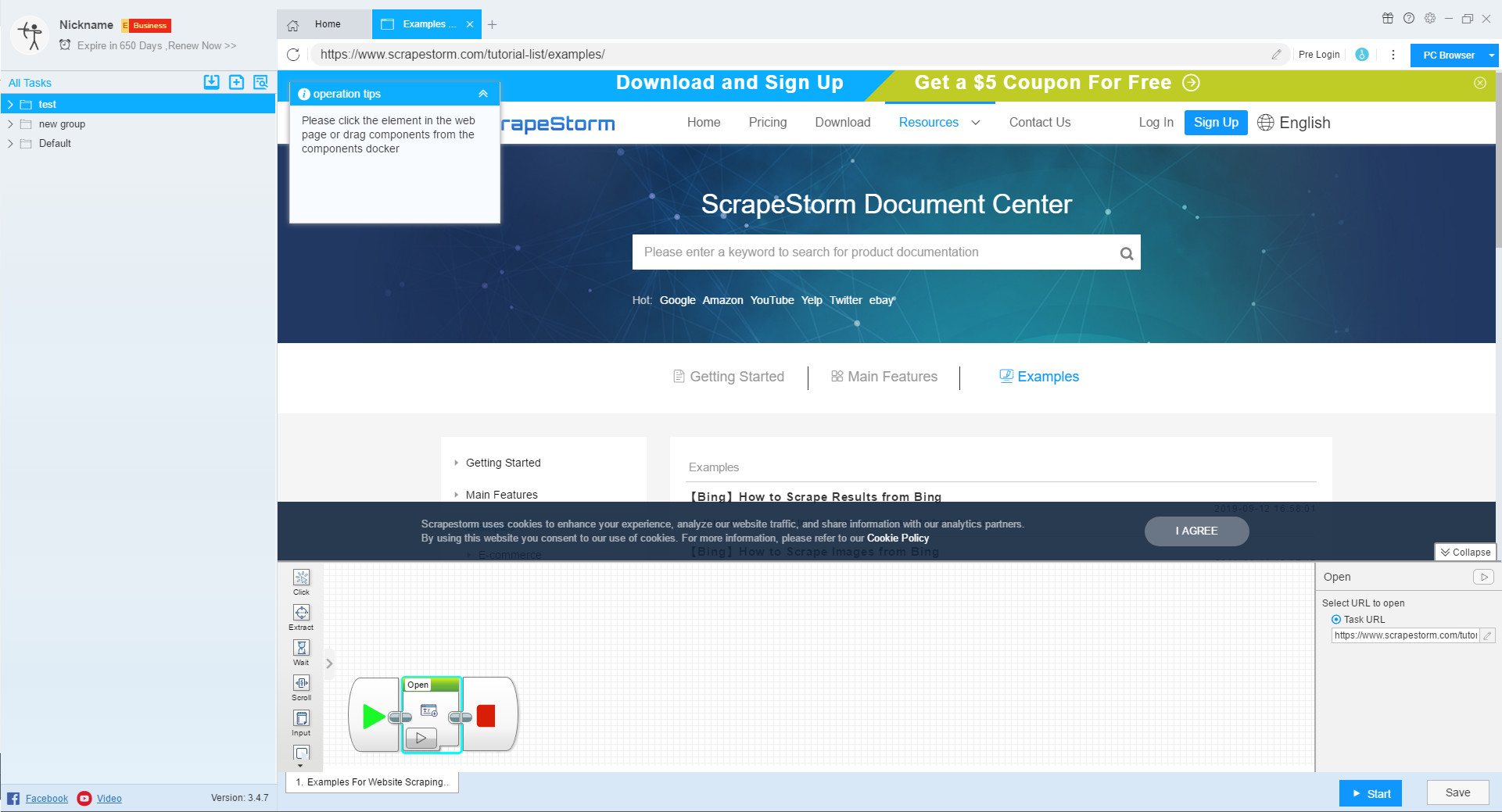

After you create a new flowchart mode task, the page jumps to the task editing interface. This tutorial explains how to set up a task in the flowchart mode task editing interface.

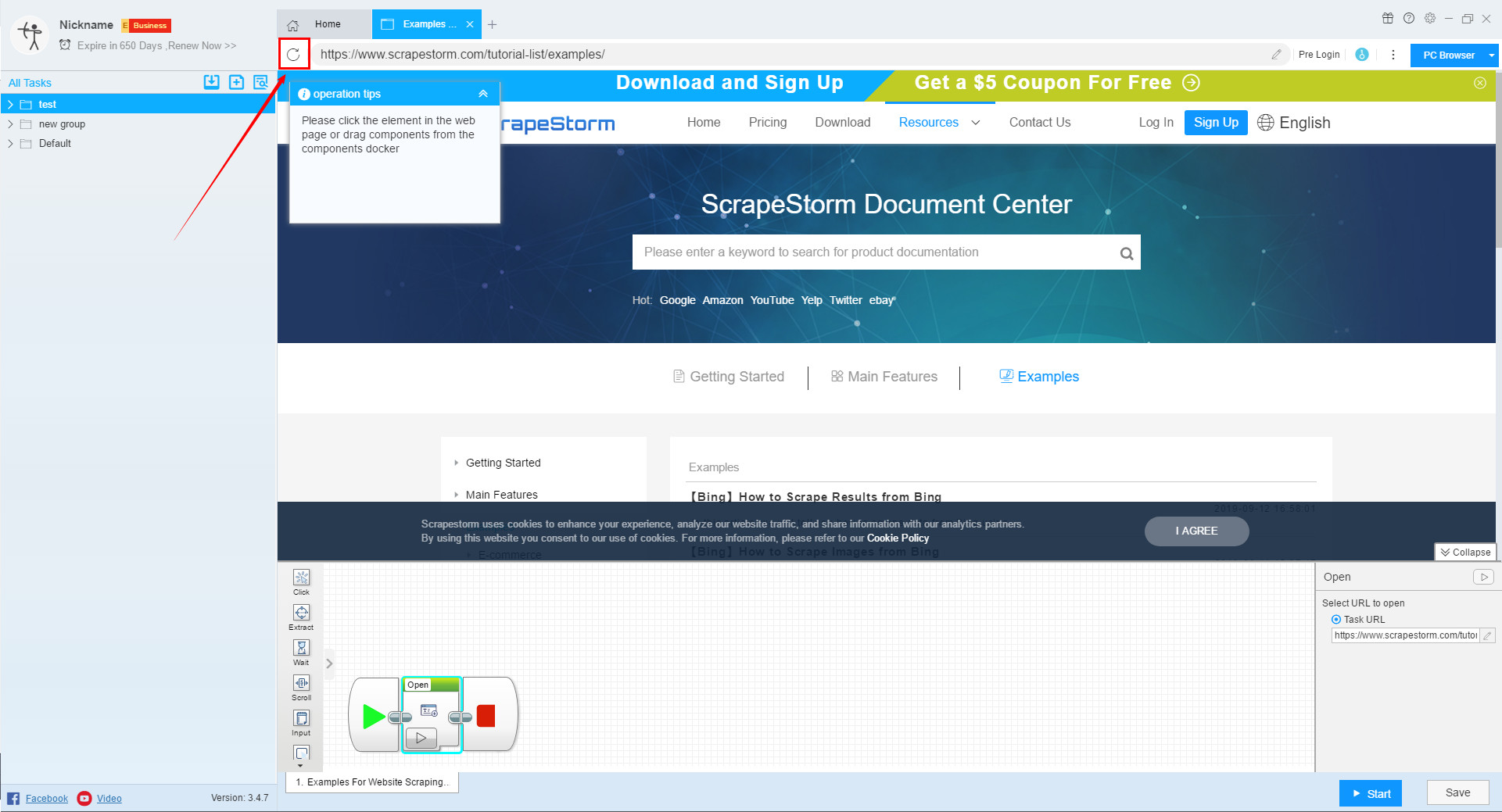

1. Refresh

If the page fails to load, you can refresh it.

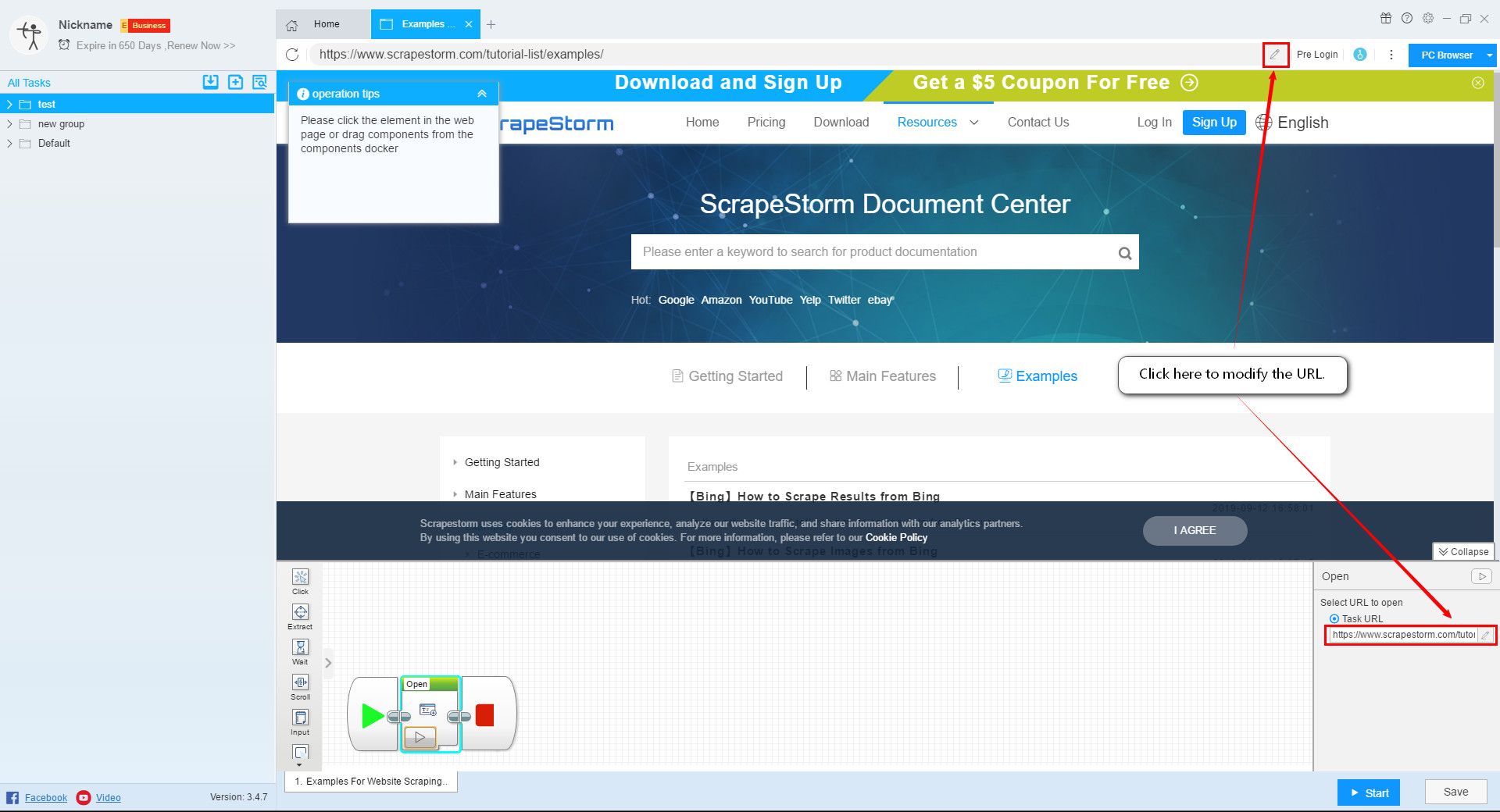

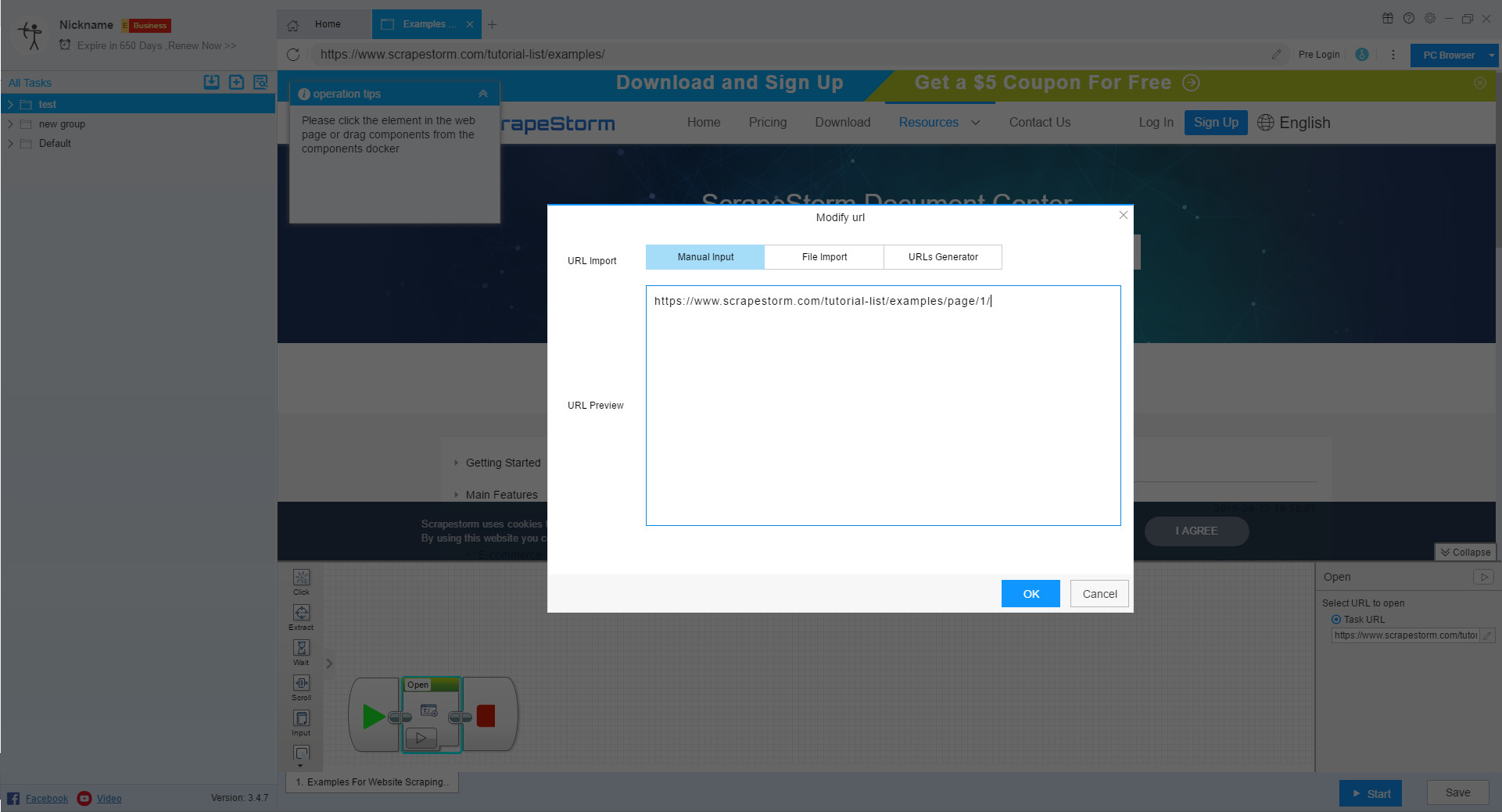

2. Edit URLs

Click here to edit the URLs. If you have more than 200 URLs, modify the local file directly.

If you imported the URLs from a local file, changes made here will not affect that file.
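If you maintain a long URL list in a local file, a short script can generate it for you. The Python sketch below is illustrative only: it assumes the file is plain UTF-8 text with one URL per line, and the base URL and page range are hypothetical placeholders, not values taken from ScrapeStorm.

```python
from pathlib import Path


def build_urls(base_url: str, first_page: int, last_page: int) -> list[str]:
    """Return one URL per page number, from first_page to last_page inclusive."""
    return [f"{base_url}{page}" for page in range(first_page, last_page + 1)]


def write_url_file(path: str, base_url: str, first_page: int, last_page: int) -> int:
    """Write the URLs to a text file and return how many were written.

    Assumption: the import file is plain UTF-8 text with one URL per line.
    """
    urls = build_urls(base_url, first_page, last_page)
    Path(path).write_text("\n".join(urls) + "\n", encoding="utf-8")
    return len(urls)
```

For example, `write_url_file("urls.txt", "https://example.com/list?page=", 1, 250)` writes 250 URLs, from `...page=1` to `...page=250`.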

For more details, please refer to the following tutorial:

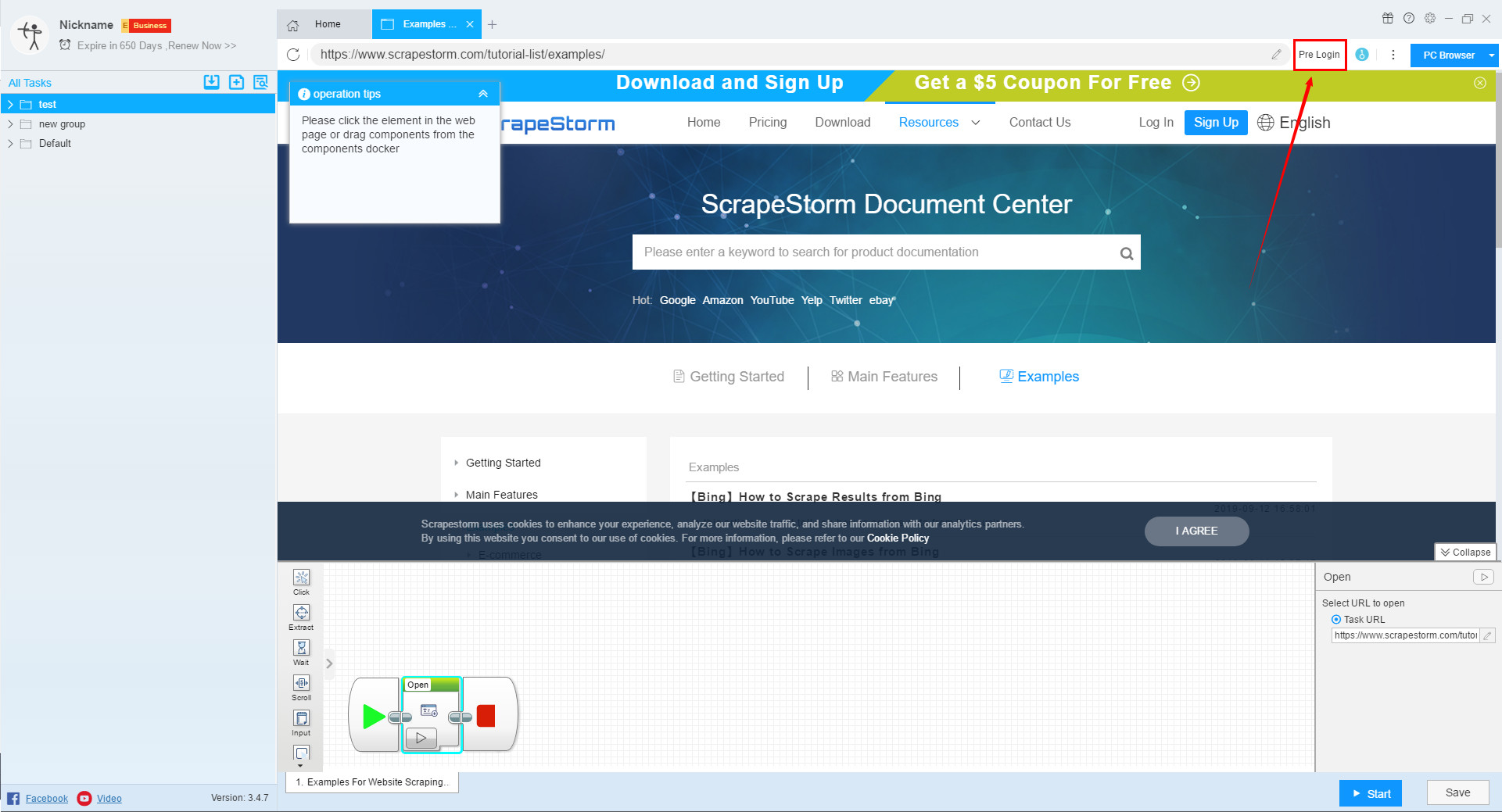

3. Pre Login

When you encounter a webpage that requires login, you can click this button to use the Pre Login function.

For more details, please refer to the following tutorial:

How to scrape web pages that need to be logged in to view

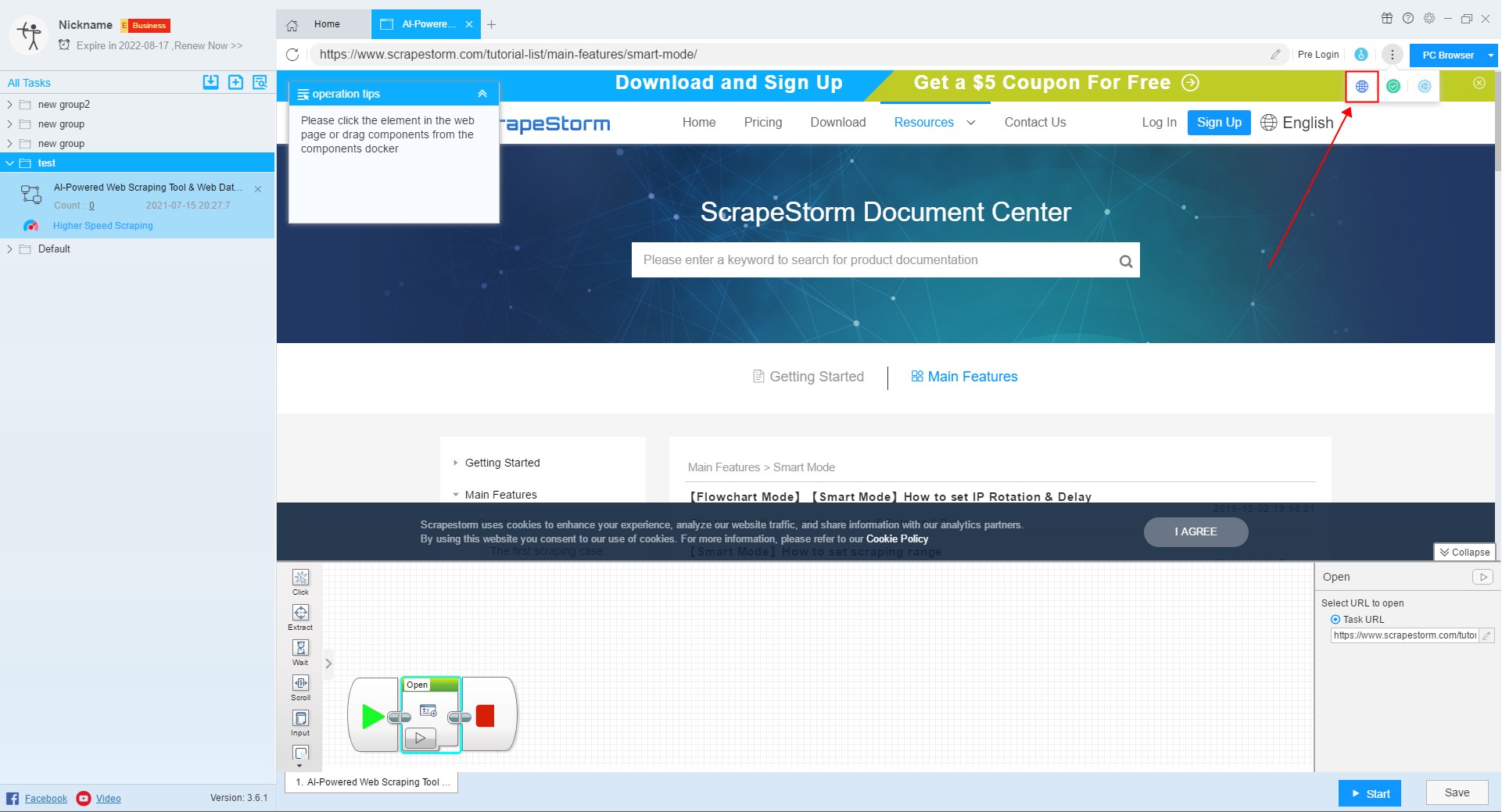

4. Solve Captcha

When you encounter a captcha while editing a task, you can click this button to use the Solve Captcha function.

5. Open Proxy

When you encounter a captcha or other anti-scraping measures on the home page, you can open a proxy in addition to using the Solve Captcha function.

Click here to learn more about Open Proxy.

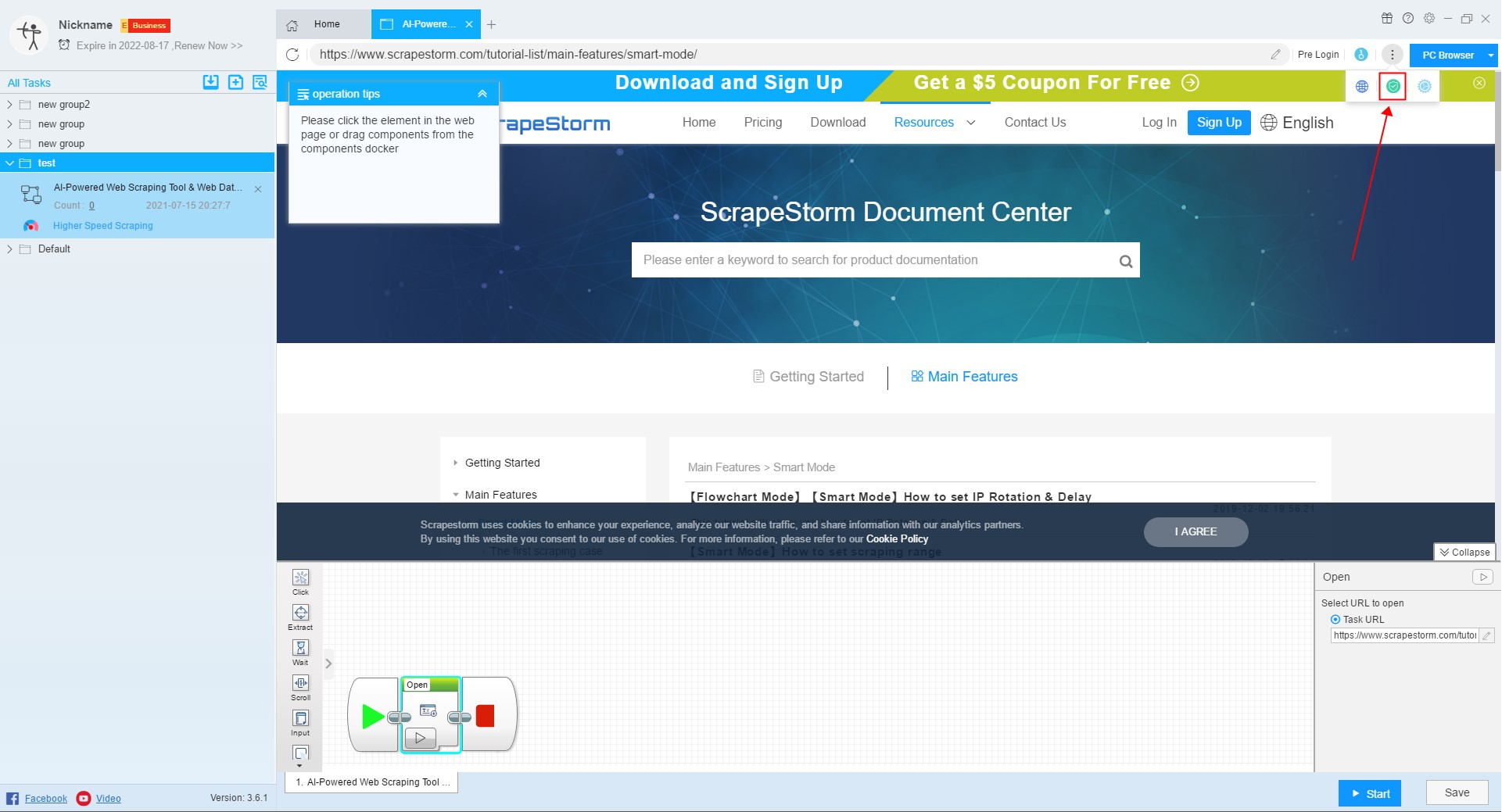

6. Web Security Option

You can try this option when a web page behaves abnormally, but be aware that enabling it may prevent some content on the page from being scraped (such as content inside an iframe).

7. Advanced Settings

In the advanced settings, you can monitor pushState events and block URLs.
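To illustrate what blocking URLs means in general, the sketch below checks request URLs against wildcard patterns using Python's `fnmatch.fnmatchcase`. The patterns are hypothetical, and this is not a description of how ScrapeStorm's advanced settings match blocked URLs internally.

```python
from fnmatch import fnmatchcase

# Hypothetical block list: an ad domain and video files.
BLOCK_PATTERNS = ["*://*.doubleclick.net/*", "*.mp4"]


def is_blocked(url: str, patterns: list[str]) -> bool:
    """Return True if the URL matches any wildcard pattern (case-sensitive)."""
    return any(fnmatchcase(url, pattern) for pattern in patterns)
```

`fnmatchcase` is used instead of `fnmatch` so the result does not depend on the operating system's case rules; in these patterns `*` also matches `/`.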

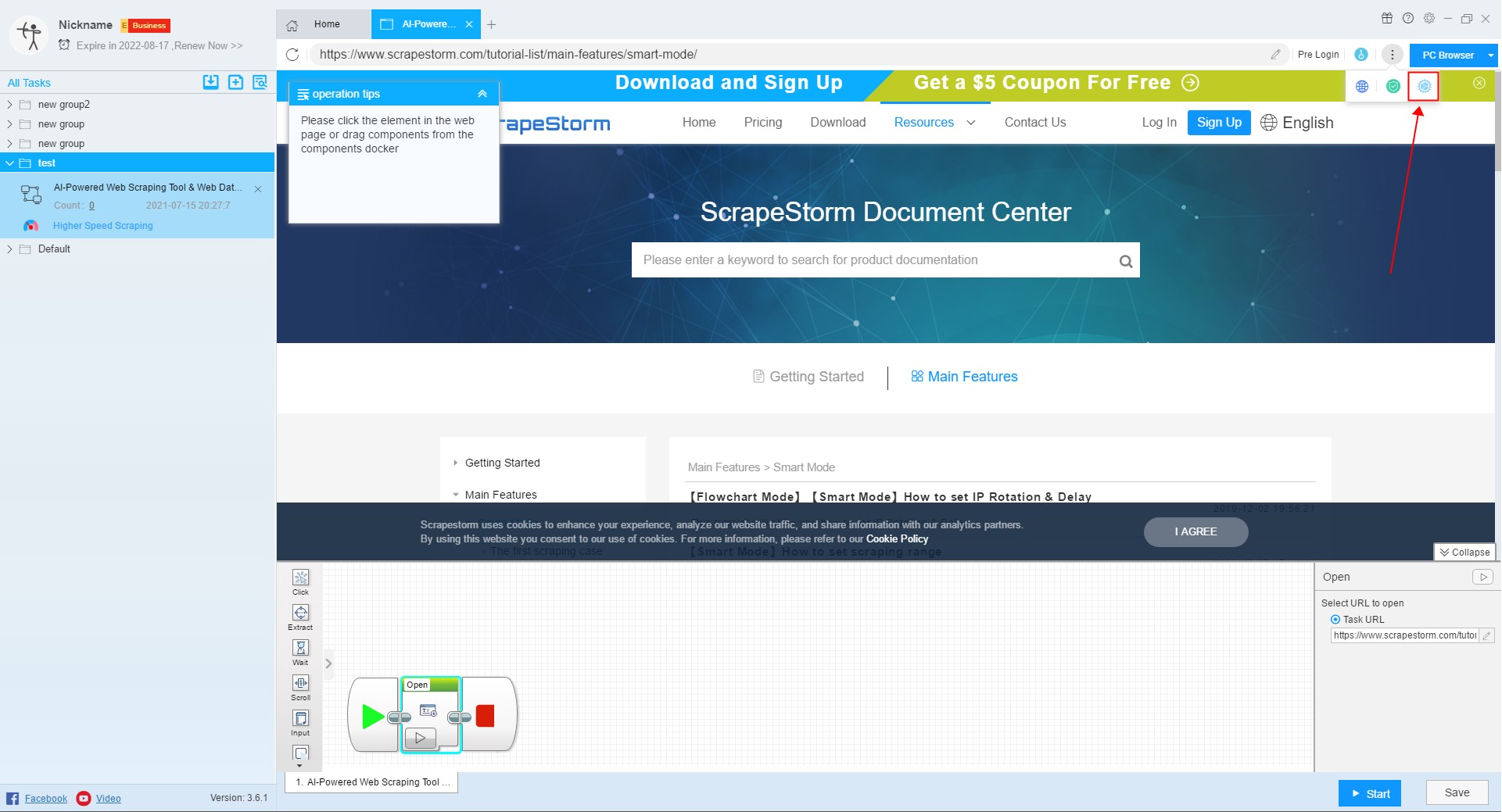

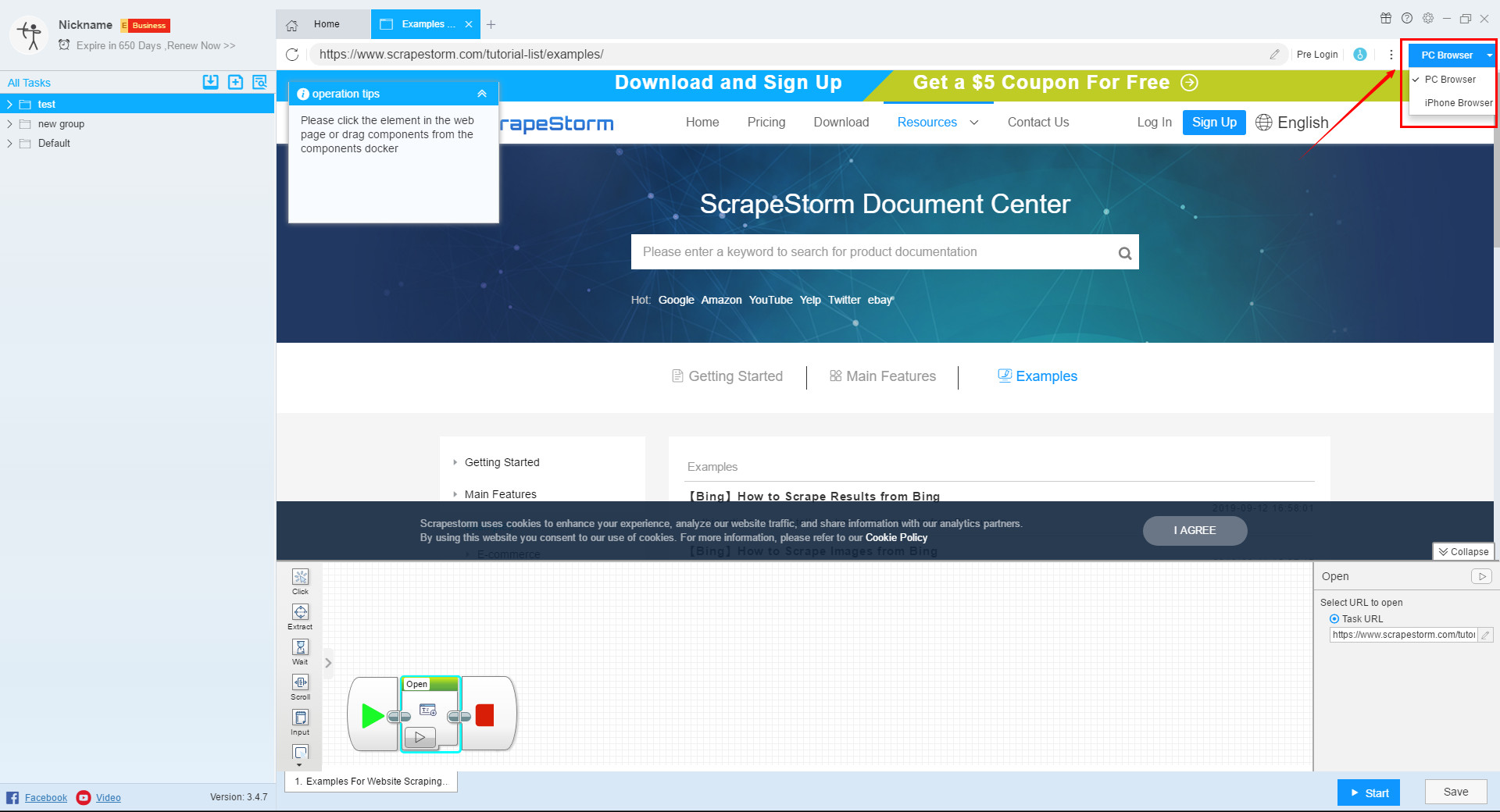

8. Switch Browser

Some webpages display different content on computers and on mobile phones. By default, the software generally scrapes the desktop version of a webpage. To scrape the mobile version instead, switch the browser mode.

For more details, please refer to the following tutorial:

What is the role of switching browser mode
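Many websites, though not all, decide which version to serve based on the `User-Agent` request header. As an illustration under that assumption, the sketch below builds desktop and mobile requests with Python's `urllib.request`; the User-Agent strings are examples only, and this is not a description of how ScrapeStorm switches browser modes.

```python
import urllib.request

# Example User-Agent strings; real browsers send version-specific values.
DESKTOP_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)
MOBILE_UA = (
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Mobile/15E148 Safari/604.1"
)


def build_request(url: str, mobile: bool) -> urllib.request.Request:
    """Build a GET request that identifies itself as a mobile or desktop browser."""
    user_agent = MOBILE_UA if mobile else DESKTOP_UA
    return urllib.request.Request(url, headers={"User-Agent": user_agent})
```

Passing the result to `urllib.request.urlopen` fetches the page. Note that `urllib` stores the header name as `User-agent`, so use that spelling with `Request.get_header`.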

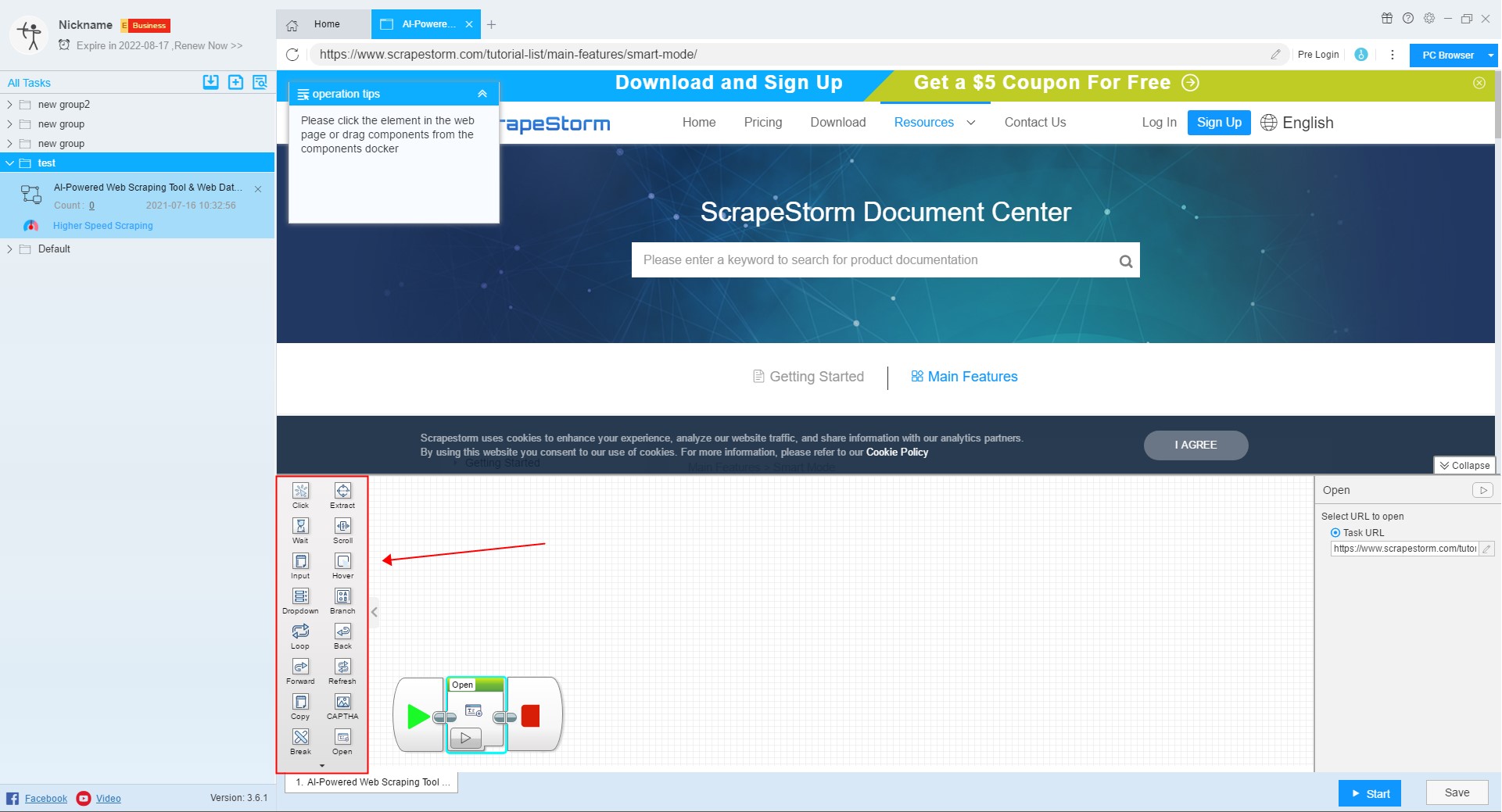

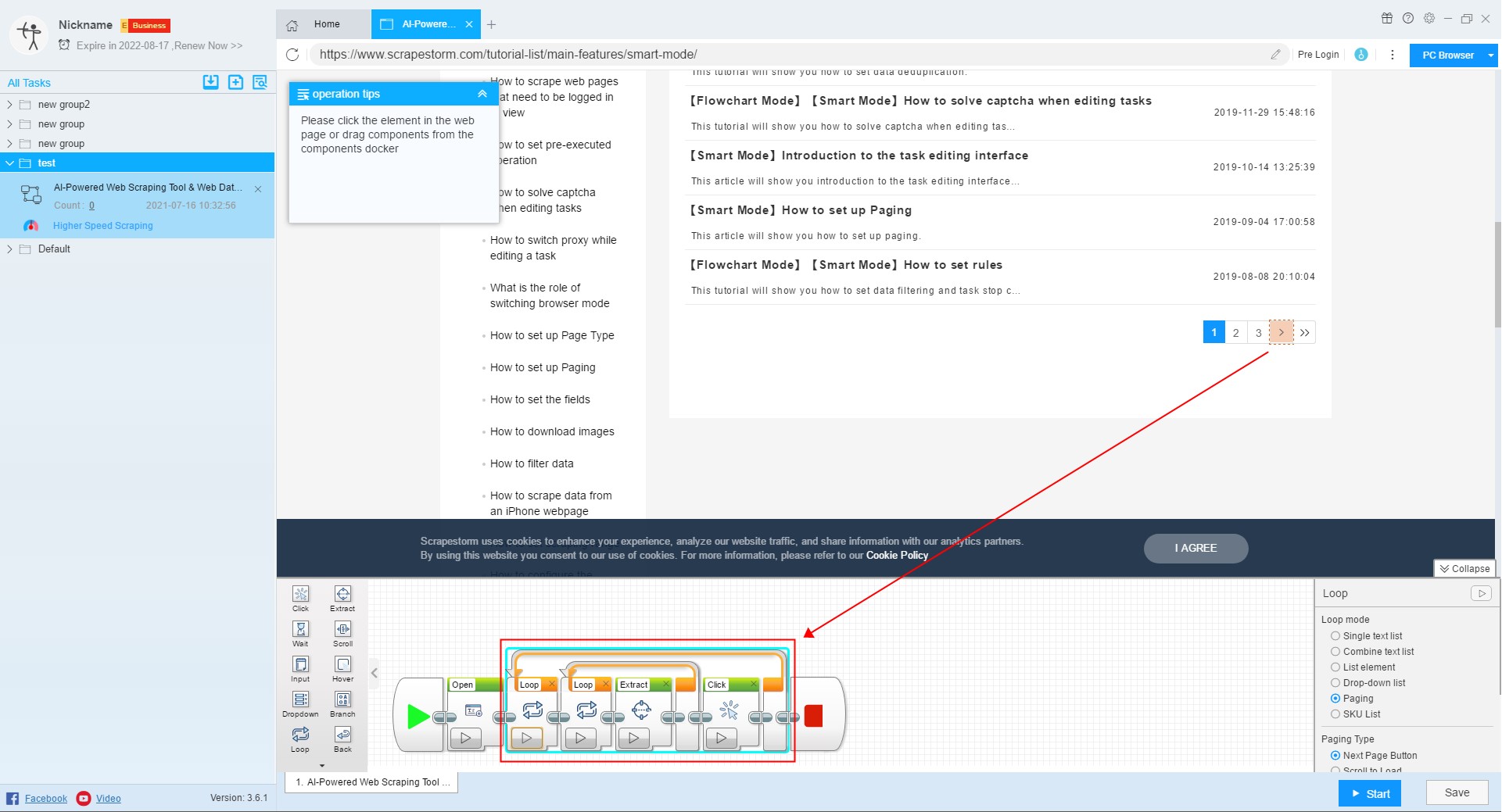

9. Flowchart Components

When setting up a task, you can use whichever components fit your needs.

For more details, please refer to the following tutorial:

Introduction to flowchart components

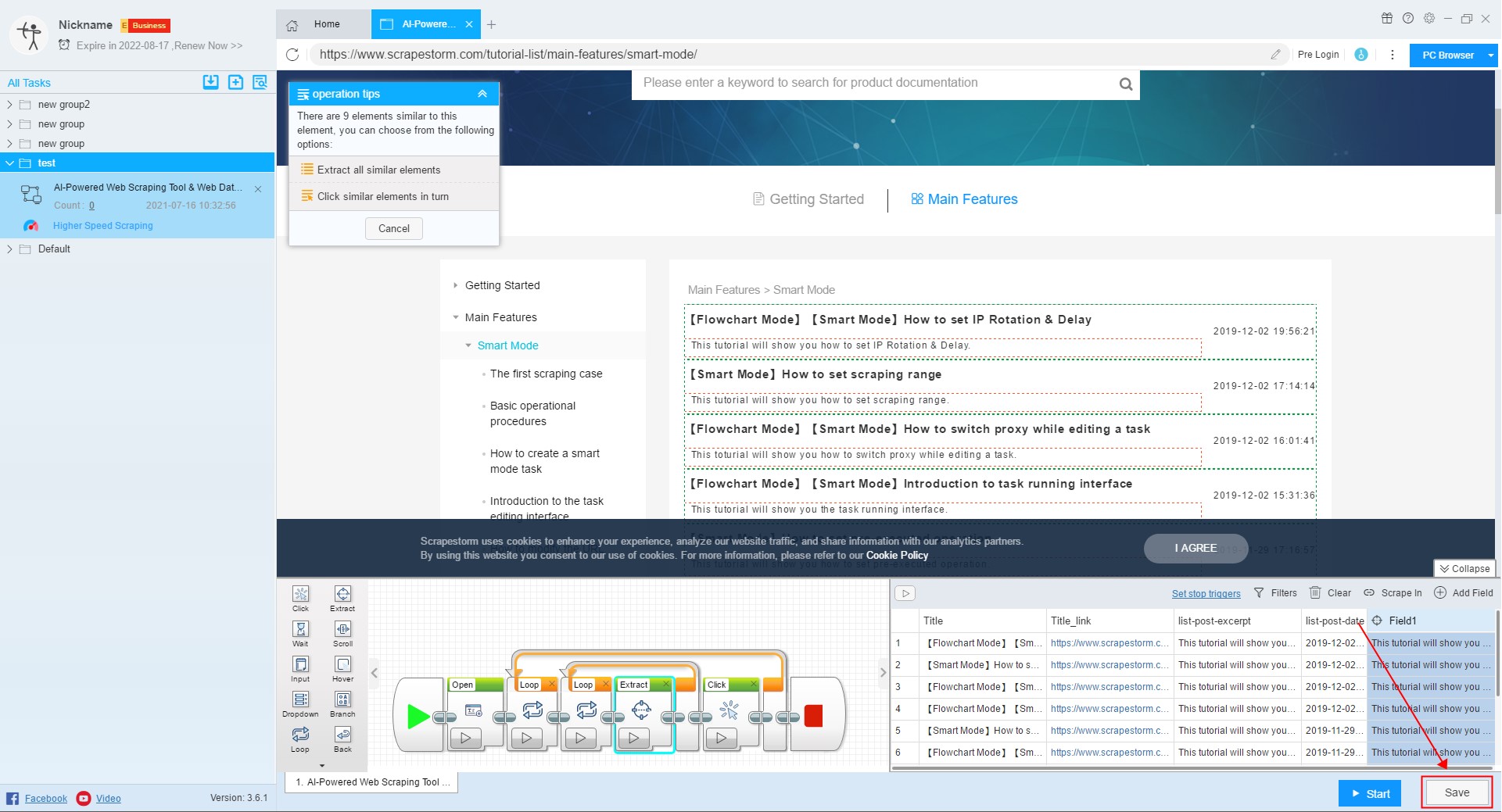

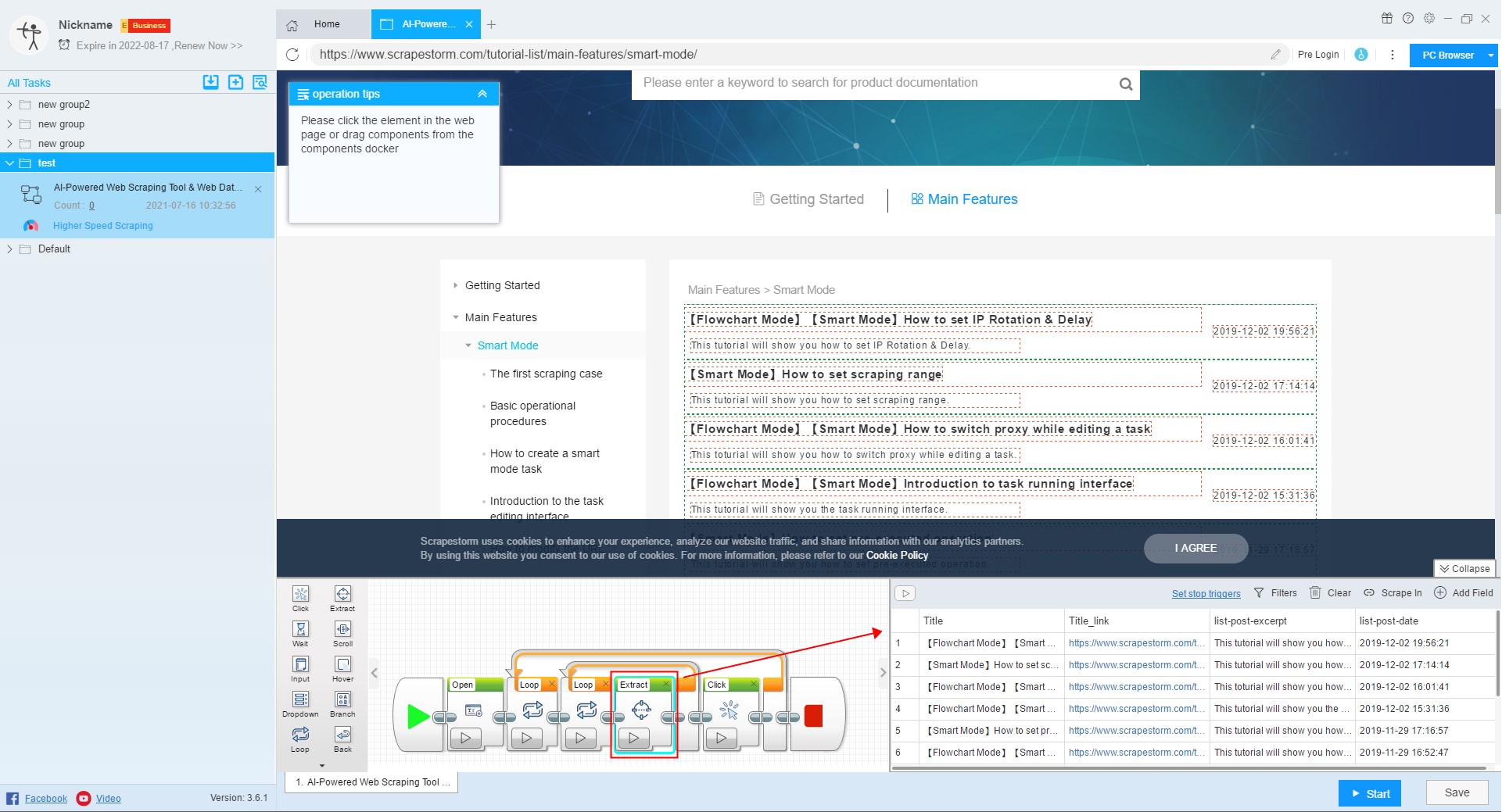

10. Application of the Extract component

The Extract component is the most basic component used when setting up a task.

For more details, please refer to the following tutorial:

11. Set up Paging

The software usually recognizes paging automatically, so you generally don’t need to set it up separately.

For more details, please refer to the following tutorial:

12. Set stop triggers

You can use this function to set triggers that stop the task.
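Conceptually, a stop trigger is a condition checked while data is being collected. The Python sketch below uses a hypothetical `StopTrigger` with two example conditions, a row limit and a per-row predicate; ScrapeStorm's actual trigger options may differ.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional

Row = dict


@dataclass
class StopTrigger:
    """Hypothetical trigger: stop after max_rows rows, or when stop_when(row) is True."""

    max_rows: Optional[int] = None
    stop_when: Optional[Callable[[Row], bool]] = None


def collect_until(rows: Iterable[Row], trigger: StopTrigger) -> list:
    """Collect rows in order until the trigger fires."""
    collected = []
    for row in rows:
        # A row that satisfies stop_when ends the run and is not kept.
        if trigger.stop_when is not None and trigger.stop_when(row):
            break
        collected.append(row)
        # Stop as soon as the row limit is reached.
        if trigger.max_rows is not None and len(collected) >= trigger.max_rows:
            break
    return collected
```

Whether the matching row itself is kept is a design choice; this sketch discards it.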

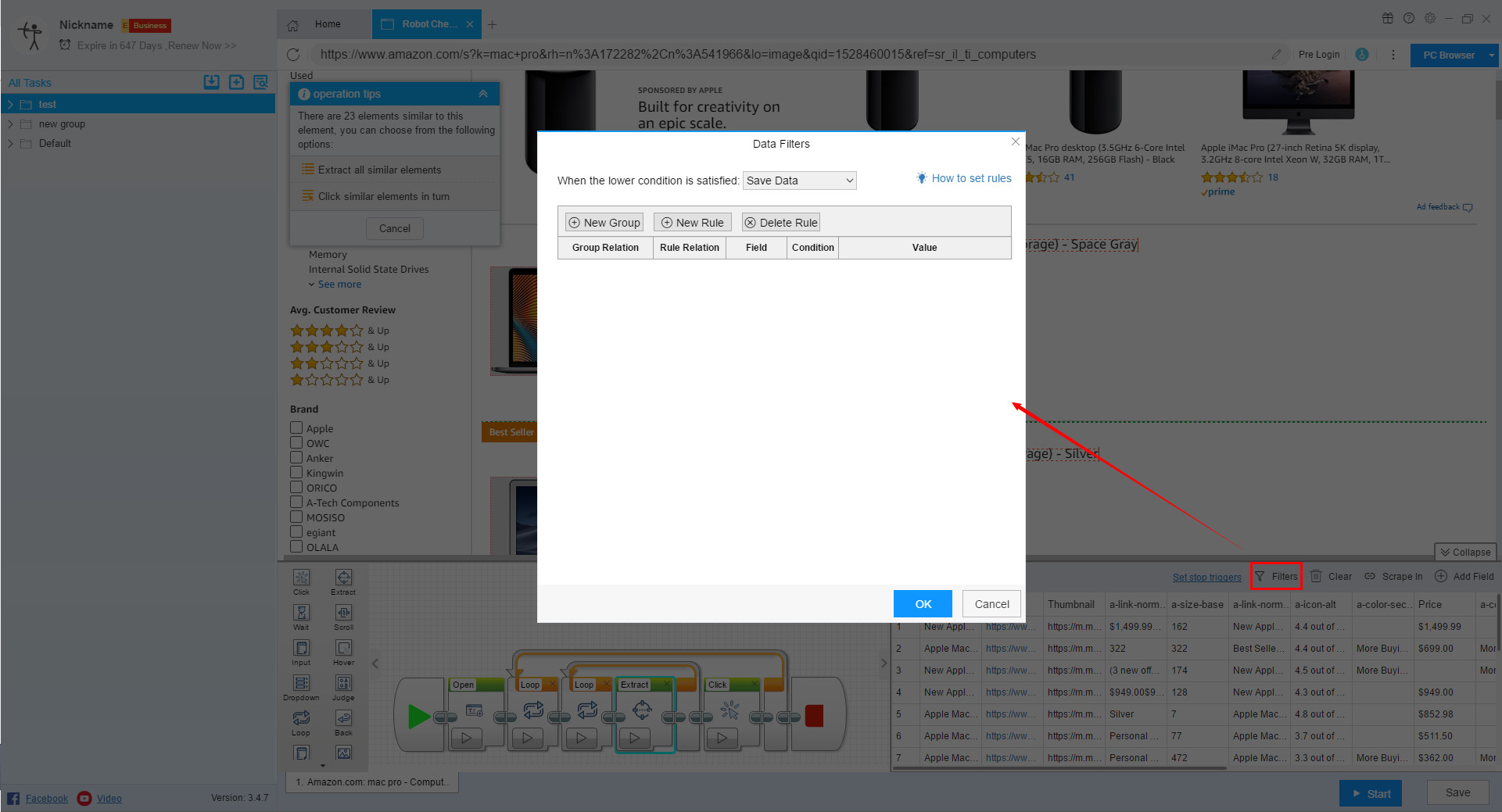

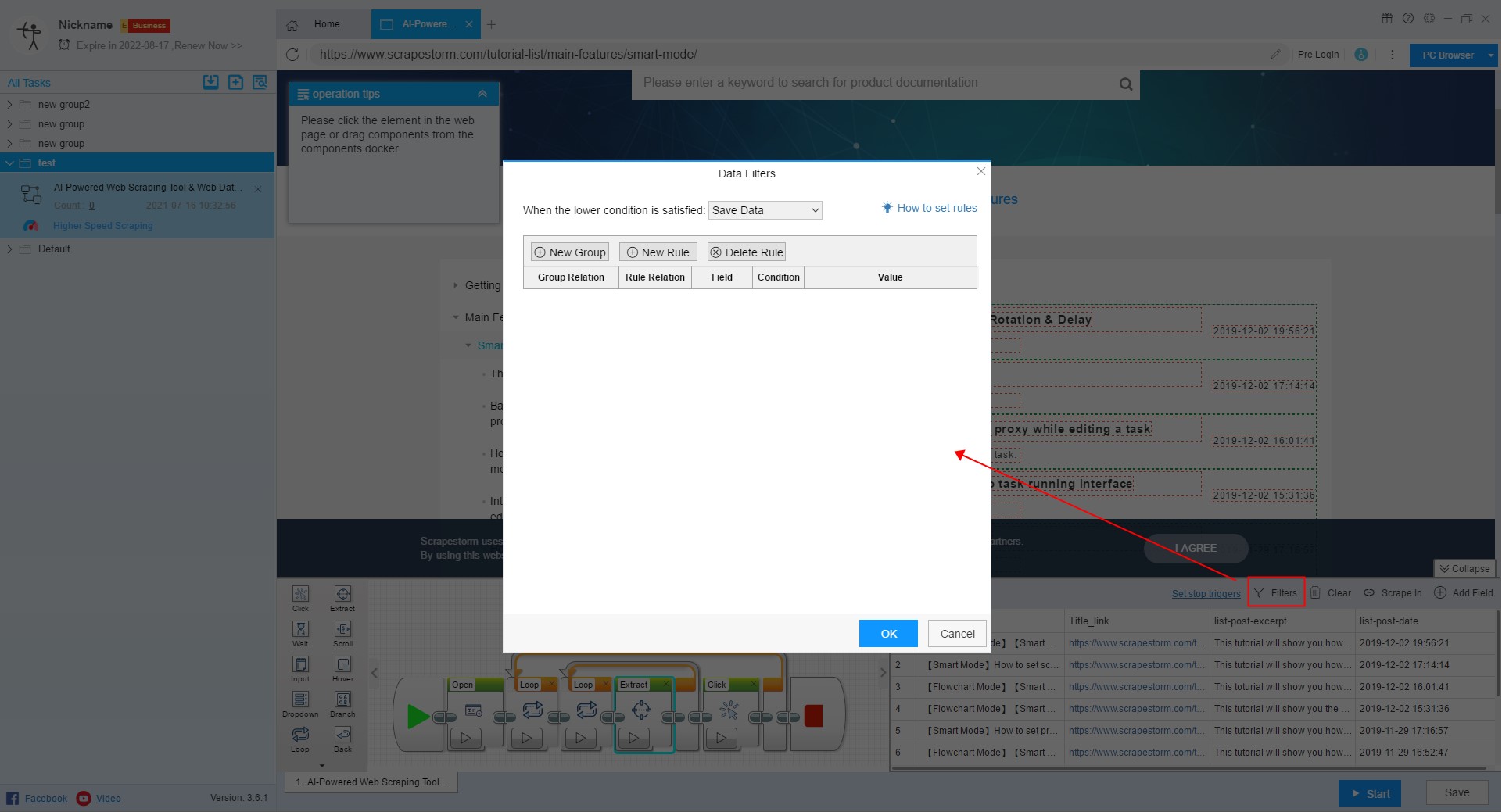

13. Filters

If you need to filter the data, you can click this button to set up filters.
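Filtering keeps only the rows that satisfy every condition you set. The sketch below models this in Python with hypothetical helper functions `contains` and `not_empty`; they are illustrations, not ScrapeStorm's filter options.

```python
from typing import Callable

Row = dict[str, str]
RowFilter = Callable[[Row], bool]


def contains(field: str, text: str) -> RowFilter:
    """Keep rows whose field contains the given text."""
    return lambda row: text in row.get(field, "")


def not_empty(field: str) -> RowFilter:
    """Keep rows whose field is present and not just whitespace."""
    return lambda row: row.get(field, "").strip() != ""


def apply_filters(rows: list[Row], filters: list[RowFilter]) -> list[Row]:
    """Return only the rows that pass every filter."""
    return [row for row in rows if all(f(row) for f in filters)]
```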

For more details, please refer to the following tutorial:

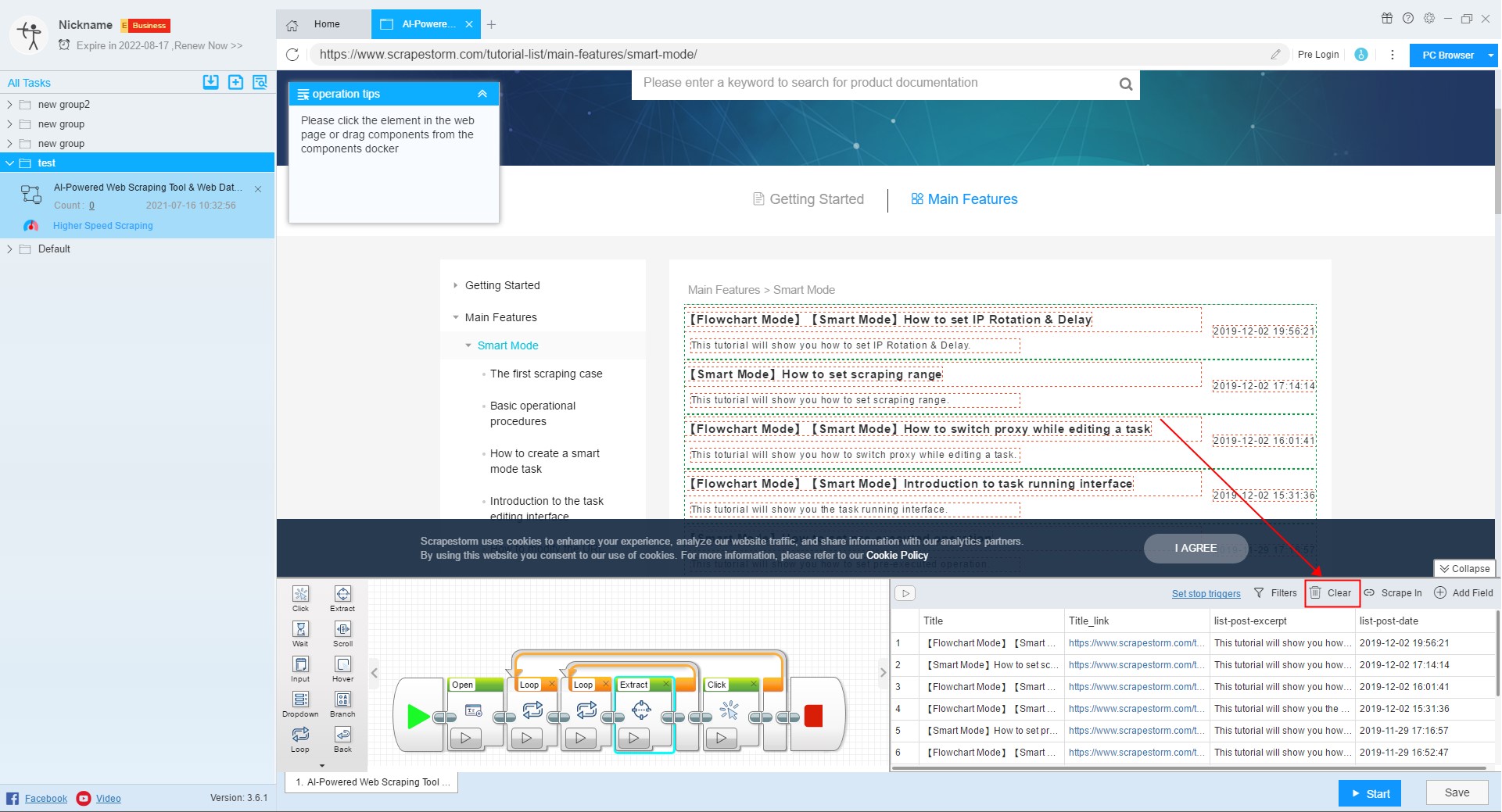

14. Clear

If you are not satisfied with the data automatically recognized by the software, you can use this function to clear all data, and then use the Add Field function to select the data you need.

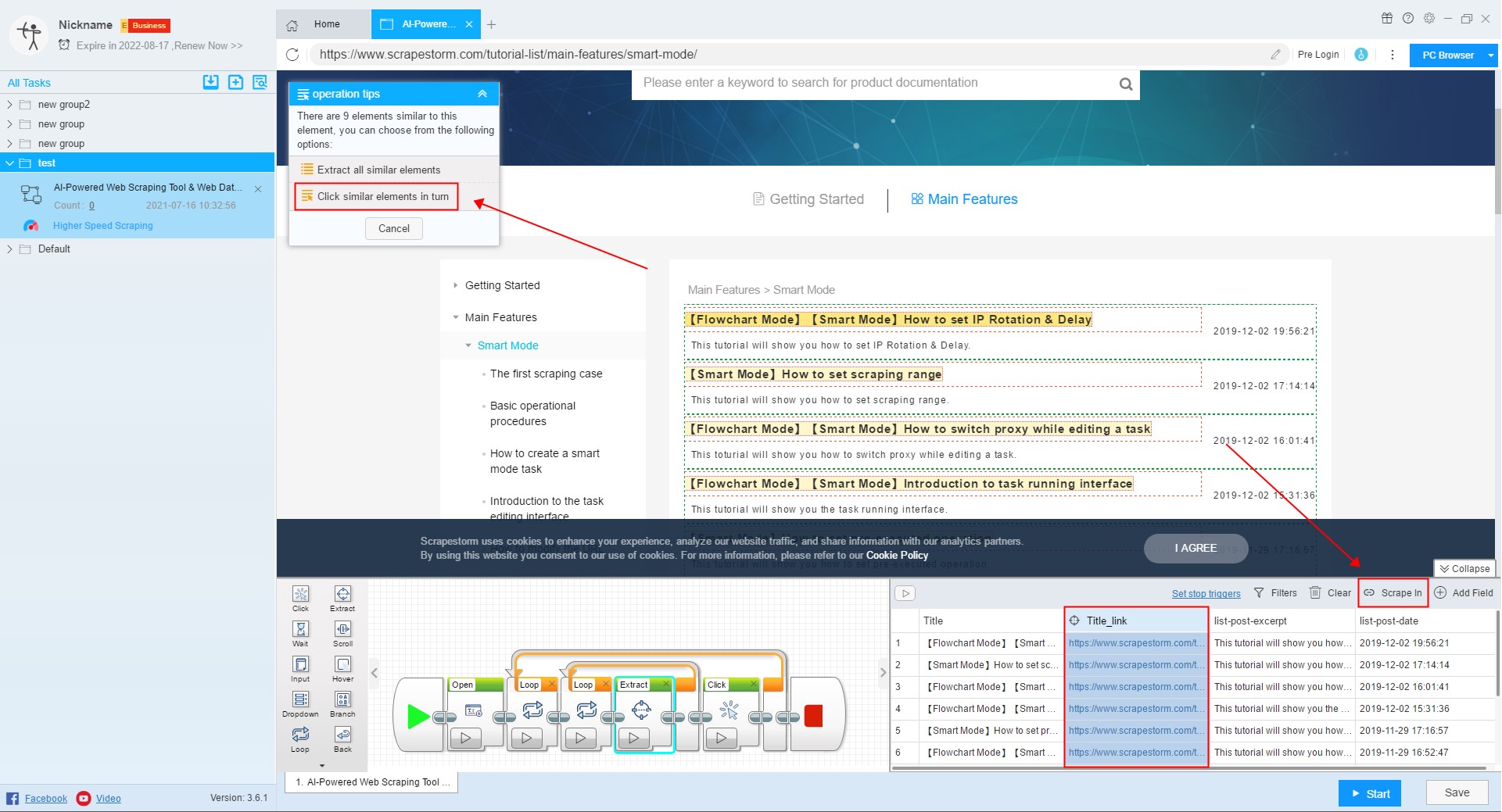

15. Scrape In

If you need to scrape data from detail pages, you can use the Scrape In function.
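Scraping detail pages generally means collecting the links on a list page and then visiting each one. As a sketch of the first step only, the code below gathers absolute link URLs with Python's standard `html.parser` and `urllib.parse.urljoin`; the sample HTML and base URL are hypothetical, and this is not ScrapeStorm's implementation.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against base_url."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Turn relative links such as "/item/1" into absolute URLs.
                self.links.append(urljoin(self.base_url, href))


def detail_links(list_html: str, base_url: str) -> list[str]:
    """Return the absolute URLs of all links found in the list page HTML."""
    parser = LinkCollector(base_url)
    parser.feed(list_html)
    parser.close()
    return parser.links
```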

For more details, please refer to the following tutorial:

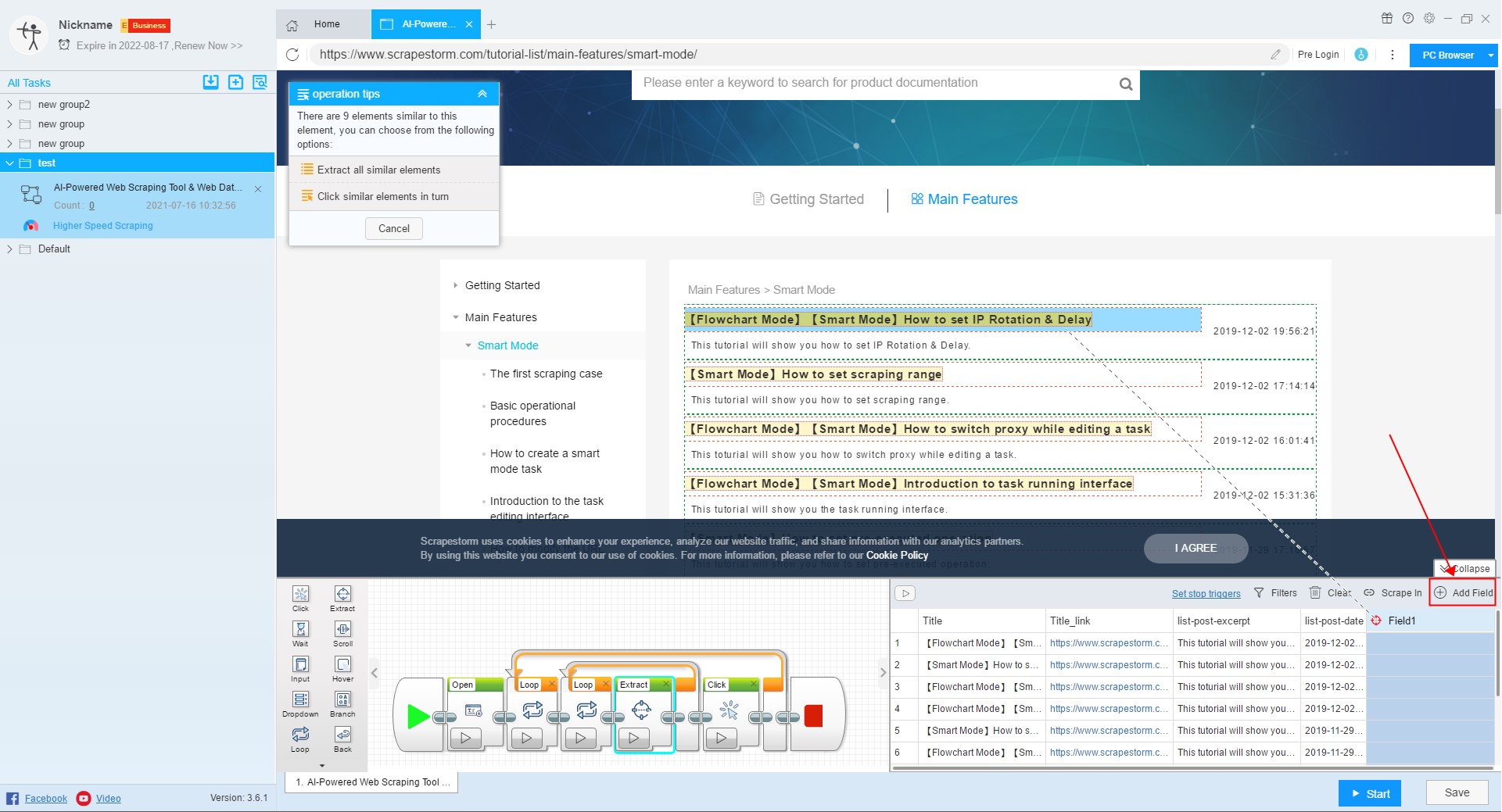

16. Add Field

You can use this feature if you need to add new fields.

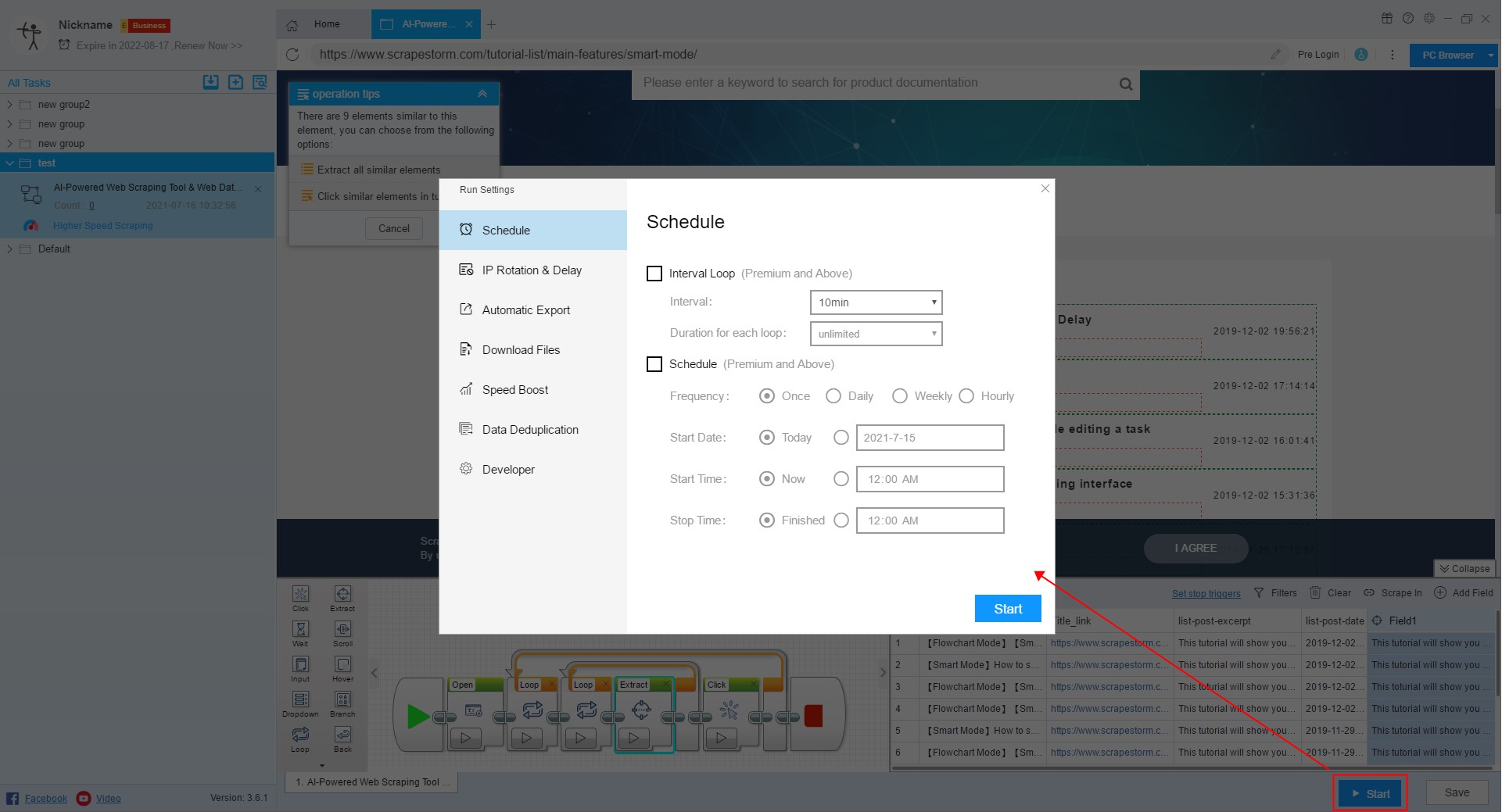

17. Start

After setting up the task, click the Start button to go to the run settings interface.

For more details, please refer to the following tutorial:

How to configure the scraping task

18. Save

To avoid losing your settings while setting up the task, click the Save button to save them. Clicking the Start button also saves the settings.