Scraping tools | Web Scraping Tool | ScrapeStorm

Abstract: Scraping tools, also known as data collection tools, are software or hardware used to collect and retrieve various types of data.

ScrapeStorm is an AI-powered web scraping tool that is easy to use and requires no programming.

Introduction

Scraping tools, also known as data collection tools, are software or hardware used to collect and retrieve various types of data. They can collect data automatically or under manual control from a variety of sources, including the Internet, local files, sensors, databases, and web services. Scraping tools play an important role in data analysis, research, monitoring, and decision support.

Applicable Scenarios

Market researchers can use web crawlers and scraping tools to monitor competitors' prices, product information, and customer feedback, so that companies can adjust their market strategies. Social media teams can use scraping tools to track brand mentions, user feedback, and trends in support of social media marketing and brand management. Financial institutions use scraping tools to obtain market data, stock prices, and exchange rates for investment decisions and risk management.
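As an illustration of the price-monitoring scenario, the following is a minimal Python sketch of what a scraping tool does behind the scenes when it extracts product names and prices from a page. It is not how ScrapeStorm itself works. The HTML snippet, the `product-name` and `price` class names, and the `PriceParser` and `extract_prices` names are all assumptions made up for this example; a real page would need its own selectors.

```python
from html.parser import HTMLParser

# Hypothetical product listing; real pages will use different markup.
SAMPLE_HTML = """
<ul>
  <li><span class="product-name">Widget A</span> <span class="price">$19.99</span></li>
  <li><span class="product-name">Widget B</span> <span class="price">$1,249.00</span></li>
</ul>
"""


class PriceParser(HTMLParser):
    """Collects (name, price) pairs from the assumed markup above."""

    def __init__(self):
        super().__init__()
        self._field = None   # field the parser is currently inside, if any
        self._current = {}   # partially built product record
        self.products = []   # completed (name, price) tuples

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class", "")
        if css_class in ("product-name", "price"):
            self._field = css_class

    def handle_data(self, data):
        text = data.strip()
        if self._field is None or not text:
            return
        if self._field == "price":
            # Strip the currency symbol and thousands separators.
            self._current["price"] = float(text.lstrip("$").replace(",", ""))
        else:
            self._current["name"] = text
        self._field = None
        # Emit the record once both fields have been seen.
        if "name" in self._current and "price" in self._current:
            self.products.append((self._current["name"], self._current["price"]))
            self._current = {}


def extract_prices(html):
    """Parse an HTML string and return a list of (name, price) tuples."""
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return parser.products


if __name__ == "__main__":
    for name, price in extract_prices(SAMPLE_HTML):
        print(f"{name}: {price:.2f}")
```

Running the extraction on a schedule and comparing the results over time is one simple way such data could feed a price-monitoring workflow.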

Pros: Scraping tools can automatically capture and organize large amounts of data, saving much of the time and effort that manual data entry and processing would require. Automated collection also reduces data entry errors and discrepancies, which improves data quality.

Cons: If the source data is inaccurate or inconsistent, a scraping tool can retrieve and pass along that incorrect information automatically. Collecting large amounts of data can involve privacy and security risks, so sensitive information must be handled carefully. Scraping tools also need regular maintenance and updates to keep their performance and functionality stable.
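One common way to limit the first risk is to check scraped records before they are used. The Python sketch below shows the idea under simple assumptions: each record is a dictionary with a `name` and a `price` field, and the `validate_record` and `split_valid` helpers are hypothetical names introduced only for this example. Real data would need rules suited to its own fields.

```python
def validate_record(record):
    """Return a list of problems found in one scraped record (empty if none)."""
    problems = []

    name = record.get("name")
    if not isinstance(name, str) or not name.strip():
        problems.append("missing name")

    price = record.get("price")
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        problems.append("price is not a number")
    elif price <= 0:
        problems.append("price is not positive")

    return problems


def split_valid(records):
    """Separate clean records from those that need manual review."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


if __name__ == "__main__":
    scraped = [
        {"name": "Widget A", "price": 19.99},
        {"name": "", "price": 5.0},
        {"name": "Widget C", "price": "N/A"},
    ]
    valid, rejected = split_valid(scraped)
    print(len(valid), "valid,", len(rejected), "rejected")
```

Checks like these cannot tell whether a plausible-looking value is actually correct, so they reduce the risk of bad source data rather than remove it.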

Legend

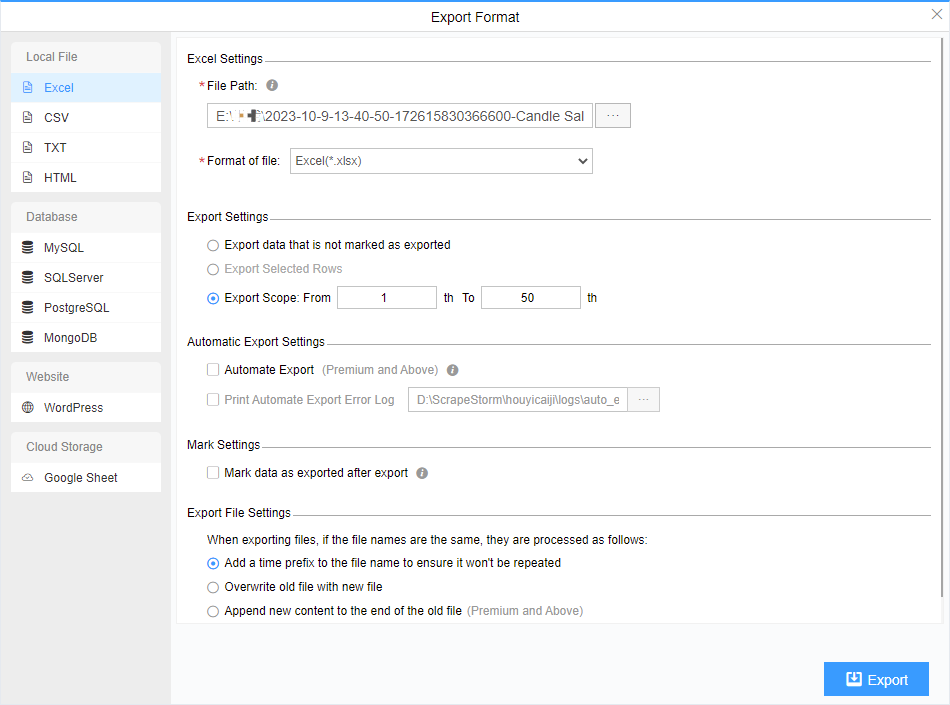

1. ScrapeStorm interface.

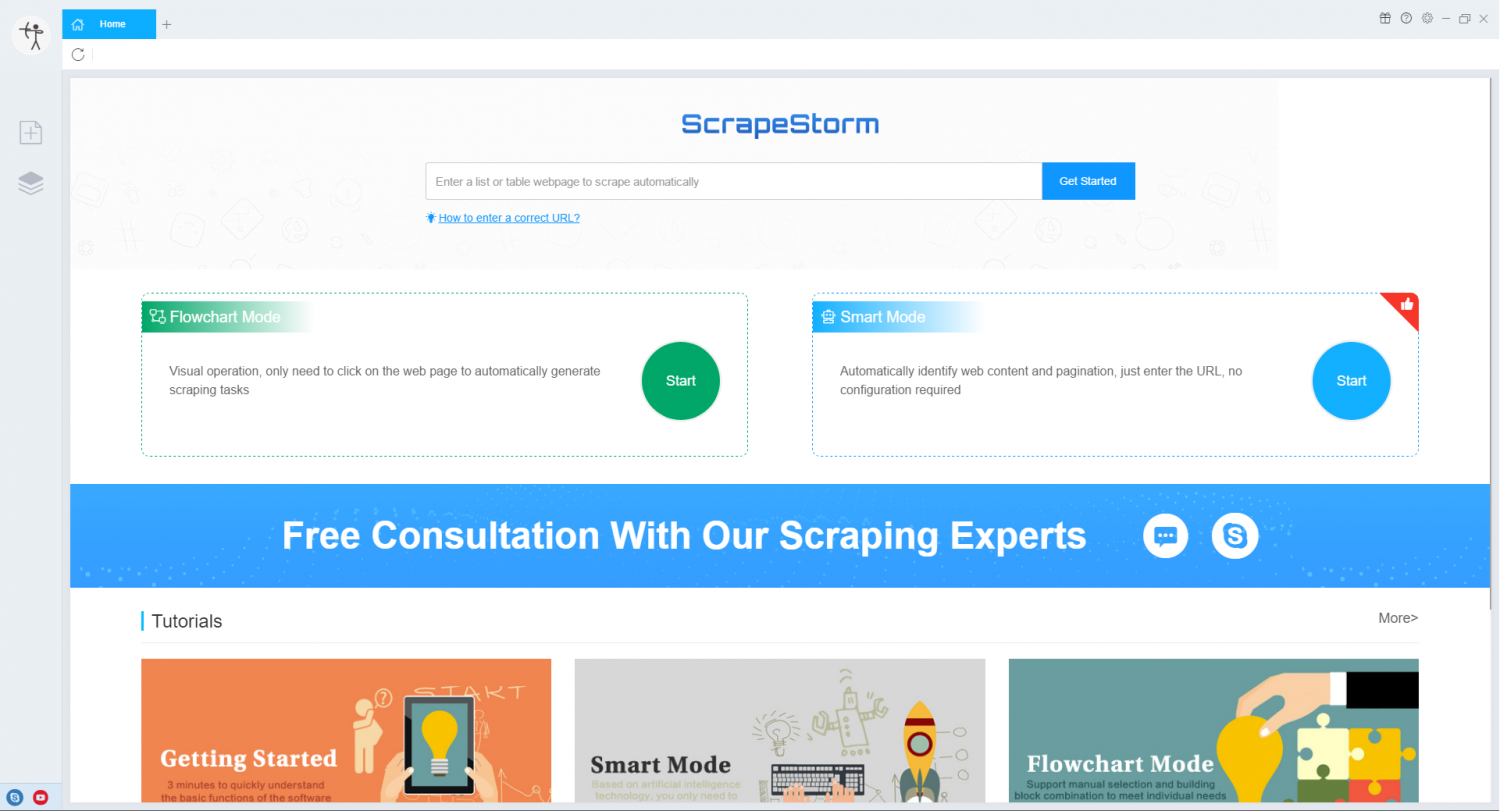

2. ScrapeStorm export interface.